Neocloud is not a term from the Matrix movies. It’s not a product. It’s not a technology. It’s not a service.

The neoclouds are vendors that offer GPU processing-as-a-service (GPUaaS). Some are startups. Some began aggregating GPUs for crypto-mining and transitioned to GPUaaS. For others, the primary business is building and operating data centers.

They are all hoping to capitalize on the surging demand for AI, machine learning, data analytics and other use cases that require high-performance computing. They are also operating in a business climate in which venture capital is readily available for anything associated with AI.

Led by CoreWeave, Lambda Labs, Crusoe and Nebius, the neoclouds compete with the general-purpose hyperscalers (AWS, Azure, Google) that also offer GPUaaS. The difference is that the neoclouds are pure-play vendors that compete on price and technological specialization.

The global GPU-as-a-service market was worth $3.23 billion in 2023 and is projected to reach $49.84 billion by 2032, a compound annual growth rate of roughly 36%, according to Fortune Business Insights. (That estimate includes both hyperscalers and neoclouds.)
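The growth figure is a compound annual growth rate (CAGR). As a quick sanity check, here is a minimal Python sketch of the standard CAGR formula applied to the two endpoints of the forecast. It assumes a nine-year span from 2023 to 2032. The research firm may measure from a different base year, so the result will not necessarily match its published rate exactly.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: the constant yearly rate that turns
    start_value into end_value over the given number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market-size endpoints from the Fortune Business Insights forecast, in billions of USD.
# Assumption: growth is measured over 2023-2032, i.e. nine years.
rate = cagr(3.23, 49.84, 2032 - 2023)
print(f"Implied CAGR: {rate:.1%}")
```

This prints an implied CAGR of about 35.5%, which is consistent with the rounded 36% figure.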

Who are the neoclouds?

Four vendors have emerged as leaders in the neocloud market, based on the amount of capital raised, a successful IPO or publicly reported revenue.

- CoreWeave: The undisputed leader of the neoclouds, CoreWeave has raised more than $7 billion. One of its investors is Nvidia, which gives it a pipeline to Nvidia’s GPUs. And CoreWeave has already had a successful IPO. In its first earnings report, the company’s quarterly revenue hit nearly $1 billion and CoreWeave predicts annual revenue of $5 billion. High-profile early customers include Microsoft, OpenAI, Google and Nvidia. It also offers object storage for AI workloads, as well as CPU clusters.

- Lambda Labs: Billing itself as the ‘AI developer cloud,’ Lambda Labs not only offers cloud GPUs but also provides on-premises, private cloud GPU clusters that include InfiniBand networking and storage. Lambda competes on price and ease of use, touting its ‘one-click clusters.’ Nvidia is also an investor.

- Crusoe: Crusoe’s edge is that its core business is building data centers, with an emphasis on sustainability and renewable energy. (Crusoe recently announced it has secured 4.5GW of natural gas to power its AI data centers.) Crusoe offers state-of-the-art GPU infrastructure, intelligent orchestration, and API-driven managed services. In December, the company raised $600 million.

- Nebius: Amsterdam-based Nebius, which owns a large data center in Finland (as well as plans for one in Missouri), offers full-stack AI infrastructure-as-a-service. The company announced earnings results on May 20, with quarterly revenue at $55.3 million, and says it is on track for $750 million to $1 billion in annual recurring revenue.

Neoclouds vs. hyperscalers

Virtually every enterprise has a solid working relationship with at least one of the hyperscalers, and probably more than one, if we include Oracle and IBM. So, if they all offer GPU-as-a-service, what’s the rationale for going with an upstart neocloud?

According to Dr. Owen Rogers, senior research director for cloud computing at the Uptime Institute, the answer is simple: Price.

Aggregating numbers from the three hyperscalers and three neoclouds, Uptime calculates that the average hourly cost of an Nvidia DGX H100 instance when purchased on-demand from a hyperscaler was $98. When an approximately equivalent instance is purchased from a neocloud, the unit cost drops to $34, a 66% saving.
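The saving is simply one minus the ratio of the two prices. The short Python sketch below reproduces the arithmetic from the rounded averages quoted above. The function name is illustrative.

```python
def percent_saving(baseline_price: float, alternative_price: float) -> float:
    """Fractional saving from paying alternative_price instead of baseline_price."""
    return 1 - alternative_price / baseline_price


# Uptime's average on-demand hourly prices for a DGX H100-class instance (USD, rounded).
hyperscaler_hourly = 98.0
neocloud_hourly = 34.0

saving = percent_saving(hyperscaler_hourly, neocloud_hourly)
print(f"Saving: {saving:.1%}")
```

With the rounded $98 and $34 figures, this prints a saving of about 65.3%. The 66% in Uptime’s analysis presumably reflects unrounded averages.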

Rogers explains: “Neoclouds may benefit from lower costs because they do not need to maintain a wide variety of new and legacy infrastructure. Hyperscalers, on the other hand, provide a diverse range of CPUs, GPUs and specialized equipment for various use cases on a larger scale across infrastructure, platform and software services. Hyperscaler clouds typically offer dozens of products, with millions of individual line items for sale. In contrast, neoclouds provide only a handful of product lines, with variations in the tens. This focus allows them to operate with less diversely qualified staff, optimize a homogenous IT estate at scale, and reduce management overheads. These cost savings can then be passed on to customers as lower prices.”

Hyperscalers could, if they chose to, discount their own GPUaaS offerings to compete on price with the neoclouds, Rogers adds. But they don’t have to. Enterprises considering a neocloud have to weigh all the other factors that typically fall in favor of the incumbent.

Hyperscale data centers are secure, they are compliant with regulations, they provide a set of management and monitoring tools that are familiar to enterprise IT, and they don’t require negotiating a new contract with a new vendor.

“Hyperscalers have a captive incumbent user base. For many customers, paying more to use their existing hyperscaler for GPU instances is simpler than finding a cheaper alternative,” Rogers says.

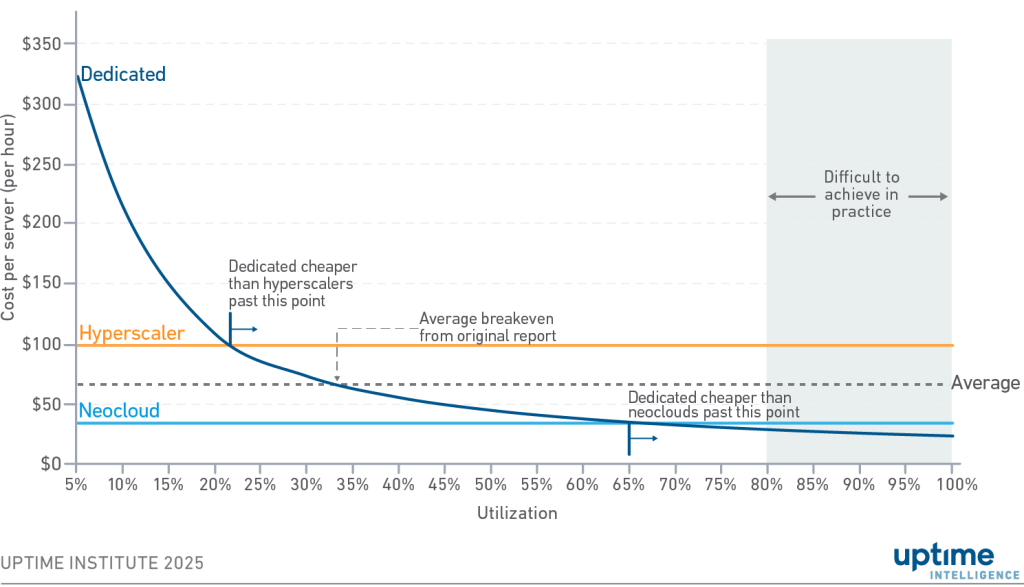

Cost per server-hour by utilization for hyperscaler versus neocloud

When using an established cloud provider, dedicated infrastructure is cheaper than the public cloud if average utilization levels are maintained at 22% or above, Uptime Institute reports. When using a neocloud, this figure rises to 66%.

Uptime Institute

Neoclouds vs. dedicated on-prem infrastructure

Uptime has also calculated the price differential among dedicated on-premises GPU infrastructure, hyperscalers and neoclouds. Uptime determined that if an enterprise achieved and maintained at least 22% utilization of a dedicated infrastructure cluster over time, it would save money compared with a hyperscaler.

However, to save money vs. a neocloud, the enterprise would have to reach 66% utilization. “Breaching the 66% threshold would be difficult for all but the most efficient AI deployments. In this scenario, many enterprises would save money using a neocloud compared with deploying their own infrastructure,” according to Uptime.
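These thresholds follow from a simple break-even idea. A dedicated cluster costs roughly the same per hour whether or not it is busy, so its effective cost per useful hour is its amortized hourly cost divided by utilization, while cloud instances are paid for only when used. The Python sketch below is a deliberately simplified model, not Uptime’s methodology. It backs out an implied hourly cost for dedicated infrastructure from the 22% hyperscaler threshold and the rounded $98 price, then compares that cost with the $34 neocloud price. The variable and function names are illustrative.

```python
def break_even_utilization(dedicated_hourly_cost: float, cloud_hourly_price: float) -> float:
    """Utilization at which owning costs the same per useful hour as renting.

    Simplified model: dedicated cost accrues every hour regardless of load,
    so cost per useful hour = dedicated_hourly_cost / utilization. Setting
    that equal to the cloud price and solving for utilization gives this ratio.
    """
    return dedicated_hourly_cost / cloud_hourly_price


HYPERSCALER_HOURLY = 98.0     # Uptime average hyperscaler price, rounded (USD)
NEOCLOUD_HOURLY = 34.0        # Uptime average neocloud price, rounded (USD)
HYPERSCALER_THRESHOLD = 0.22  # Uptime's reported break-even utilization vs. hyperscalers

# Assumption: infer an all-in hourly cost for dedicated infrastructure from the
# 22% threshold (0.22 * 98 = 21.56 USD per hour). Uptime does not state this figure.
implied_dedicated_hourly = HYPERSCALER_THRESHOLD * HYPERSCALER_HOURLY

neocloud_threshold = break_even_utilization(implied_dedicated_hourly, NEOCLOUD_HOURLY)
print(f"Implied dedicated cost: ${implied_dedicated_hourly:.2f}/hour")
print(f"Break-even utilization vs. neocloud: {neocloud_threshold:.0%}")
```

This toy model gives an implied dedicated cost of $21.56 an hour and a neocloud break-even utilization of about 63%. That is close to, but not exactly, Uptime’s 66%. The gap presumably reflects rounding and the additional factors in Uptime’s fuller cost model. The sketch still shows why the neocloud threshold is higher: the cheaper the rental price, the busier owned hardware must be to beat it.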

Neocloud pros and cons

Neoclouds offer a variety of benefits in addition to price. Their data centers are state of the art, with liquid cooling, InfiniBand networking and the latest Nvidia GPUs. Neoclouds also offer the scalability to handle enterprise requirements and on-demand flexibility.

For enterprises just starting down the road to AI, neoclouds provide a way to experiment with AI without making a major on-prem data center investment. They also offer faster time to market.

However, there are other factors to consider:

- Access to GPUs: In today’s AI gold rush climate, the demand for GPUs is outstripping supply. Not only are neoclouds competing with each other for the latest version of Nvidia’s chips, they are competing against both hyperscalers and enterprises. Customers need to make sure that the neocloud has a guaranteed pipeline of the newest GPUs.

- Race to the bottom: The neoclouds currently compete on price, so there’s a race to the bottom that is unsustainable. Prices have already dropped from around $8 an hour per GPU to under $2 an hour for some offerings. Nebius, for example, lists an Nvidia H200 GPU at $3.50 an hour, an Nvidia H100 at $2.95 an hour and an Nvidia L40S at $1.55 an hour. Look for the neoclouds to begin offering additional services to augment their revenue streams.

- Vendor consolidation: As is typical in IT, companies compete and cooperate simultaneously. Nvidia, which has a chokehold on the GPU market (at least for now), is an investor in many of the neoclouds. Microsoft and Google are both hyperscalers and customers of CoreWeave. It’s likely that the market will begin picking winners and losers, and vendor consolidation will follow.

- AI-as-a-service: When enterprises work out their AI strategy, renting raw GPU compute power from neoclouds is one way to go. But that still requires the enterprise to perform the highly complex technical work of building and operating the AI application. Just as cloud services shifted from infrastructure-as-a-service to software-as-a-service, a number of vendors now offer AI-as-a-service, in which the vendor supplies pre-trained models that enable enterprises to get their AI projects off the ground faster and more easily. All of the hyperscalers offer AI-as-a-service, as do vendors such as DataRobot, Debut InfoTech, C3.ai and Palantir.
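To put the Nebius per-GPU prices from the race-to-the-bottom item in concrete terms, here is a small Python sketch. It multiplies the quoted hourly rates by a hypothetical job size of 8 GPUs for 100 hours, chosen purely for illustration. It compares rental cost only. Because these GPUs differ in performance, the cheapest hourly rate is not necessarily the cheapest way to finish a given workload.

```python
# Nebius on-demand prices per GPU-hour as quoted in the article (USD).
NEBIUS_HOURLY_PRICES = {
    "H200": 3.50,
    "H100": 2.95,
    "L40S": 1.55,
}

# Hypothetical job size, for illustration only.
GPU_COUNT = 8
HOURS = 100


def rental_cost(price_per_gpu_hour: float, gpus: int, hours: float) -> float:
    """Total rental cost of running `gpus` GPUs for `hours` hours."""
    return price_per_gpu_hour * gpus * hours


costs = {gpu: rental_cost(price, GPU_COUNT, HOURS) for gpu, price in NEBIUS_HOURLY_PRICES.items()}
for gpu, cost in costs.items():
    print(f"{gpu}: ${cost:,.2f}")

# Price decline cited in the article: from about $8 to about $2 per GPU-hour.
decline = 1 - 2.0 / 8.0
print(f"Decline from $8 to $2 per GPU-hour: {decline:.0%}")
```

A drop from $8 to $2 is 75%, so prices “under $2” imply a decline of more than three quarters.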

Source: Network World