Over the past few years, we’ve seen developers push the boundaries of what’s possible with real-time communication — tools for collaborative work, massive online watch parties, and interactive live classrooms are all exploding in popularity.

We use AI more and more in our daily lives. Text-based interactions are evolving into something more natural: voice and video. When users interact with the applications and tools that AI developers create, we have high expectations for response time and connection quality. Complex applications of AI are built on not just one tool, but a combination of tools, often from different providers which requires a well connected cloud to sit in the middle for the coordination of different AI tools.

Developers already use Workers, Workers AI, and our WebRTC SFU and TURN services to build powerful apps without needing to think about coordinating compute or media services to be closest to their user. It’s only natural for there to be a singular “Region: Earth” for real-time applications.

We’re excited to introduce Cloudflare Realtime — a suite of products to help you make your apps truly interactive with real-time audio and video experiences. Cloudflare Realtime now brings together our SFU, STUN, and TURN services, along with the new RealtimeKit.

Say hello to RealtimeKit

RealtimeKit is a collection of mobile SDKs (iOS, Android, React Native, Flutter), SDKs for the Web (React, Angular, vanilla JS, WebComponents), and server side services (recording, coordination, transcription) that make it easier than ever to build real-time voice, video, and AI applications. RealtimeKit also includes user interface components to build interfaces quickly.

The amazing team behind Dyte, a leading company in the real-time ecosystem, joined Cloudflare to accelerate the development of RealtimeKit. The Dyte team spent years focused on making real-time experiences accessible to developers of all skill levels, and had a deep understanding of the developer journey — they built abstractions that hid WebRTC’s complexity without removing its power.

Already a user of Cloudflare’s products, Dyte was a perfect complement to Cloudflare’s existing real-time infrastructure spanning 300+ cities worldwide. They built a developer experience layer that made complex media capabilities accessible. We’re incredibly excited for their team to join Cloudflare as we help developers define the future of user interaction for real-time applications as one team.

Interactive applications shouldn’t require WebRTC expertise

For many developers, what starts as “let’s add video chat” can quickly escalate into weeks of technical deep dives into WebSockets and WebRTC. While we are big believers in the potential of WebRTC, we also know that it comes with real challenges when building for the first time. Debugging WebRTC sessions can require developers to learn about esoteric new concepts such as navigating ICE candidate failures, TURN server configurations, and SDP negotiation issues.

The challenges of building a WebRTC app for the first time don’t stop there. Device management adds another layer of complexity. Inconsistent camera and microphone APIs across browsers and mobile platforms introduce unexpected behaviors in production. Chrome handles resolution switching one way, Safari another, and Android WebViews break in uniquely frustrating ways. We regularly see applications that function perfectly in testing environments fail mysteriously when deployed to certain devices or browsers.

Systems that work flawlessly with 5 test users collapse under the load of 50 real-world participants. Bandwidth adaptation falters, connection management becomes unwieldy, and maintaining consistent quality across diverse network conditions proves nearly impossible without specialized expertise.

What starts as a straightforward feature becomes a multi-month project requiring low-level engineering to solve problems that aren’t core to your business.

We realized that we needed to extend our products to client devices to help solve these problems.

RealtimeKit SDKs for Kotlin, React Native, Swift, JavaScript, Flutter

RealtimeKit is our toolkit for building real-time applications without common WebRTC headaches. The core of RealtimeKit is a set of cross-platform SDKs that handle all the low-level complexities, from session establishment and media permissions to NAT traversal and connection management. Instead of spending weeks implementing and debugging these foundations, you can focus entirely on creating unique experiences for your users.

Recording capabilities come built-in, eliminating one of the most commonly requested yet difficult-to-implement features in real-time applications. Whether you need to capture meetings for compliance, save virtual classroom sessions for students who couldn’t attend live, or enable content creators to archive their streams, RealtimeKit handles the entire media pipeline. No more wrestling with MediaRecorder APIs or building custom recording infrastructure — it just works, scaling alongside your user base.

We’ve also integrated voice AI capabilities from providers like ElevenLabs directly into the platform. Adding AI participants to conversations becomes as simple as a function call, opening up entirely new interaction models. These AI voices operate with the same low latency as human participants — tens of milliseconds across our global network — creating truly synchronous experiences where AI and humans converse naturally. Combined with RealtimeKit’s ability to scale to millions of concurrent participants, this enables entirely new categories of applications that weren’t feasible before.

The Developer Experience

RealtimeKit focuses on what developers want to accomplish, rather than how the underlying protocols work. Adding participants or turning on recording are just an API call away. SDKs handle device enumeration, permission requests, and UI rendering across platforms. Behind the scenes, we’re solving the thorny problems of media orchestration and state management that can be challenging to debug.

We’ve been quietly working towards launching the Cloudflare RealtimeKit for years. From the very beginning, our global network has been optimized for minimizing latency between our network and end users, which is where the majority of network disruptions are introduced.

We developed a Selective Forwarding Unit (SFU) that intelligently routes media streams between participants, dynamically adjusting quality based on network conditions. Our TURN infrastructure solves the complex problem of NAT traversal, allowing connections to be established reliably behind firewalls. With Workers AI, we brought inference capabilities to the edge, minimizing latency for AI-powered interactions. Workers and Durable Objects provided the WebSockets coordination layer necessary for maintaining consistent state across participants.

SFU and TURN services are now Generally Available

We’re also announcing the General Availability of our SFU and TURN services for WebRTC developers that need more control and a low-level integration with the Cloudflare network.

SFU now supports simulcast, a very common feature request. Simulcast allows developers to select media streams from multiple options, similar to selecting the quality level of an online video, but for WebRTC. Users with different network qualities are now able to receive different levels of quality, either automatically defined by the SFU or manually selected.

Our TURN service now offers advanced analytics with insight into regional, country, and city level usage metrics. Together with Custom Identifiers, and revocable tokens, Cloudflare’s TURN service offers an in-depth view into usage and helps avoid abuse.

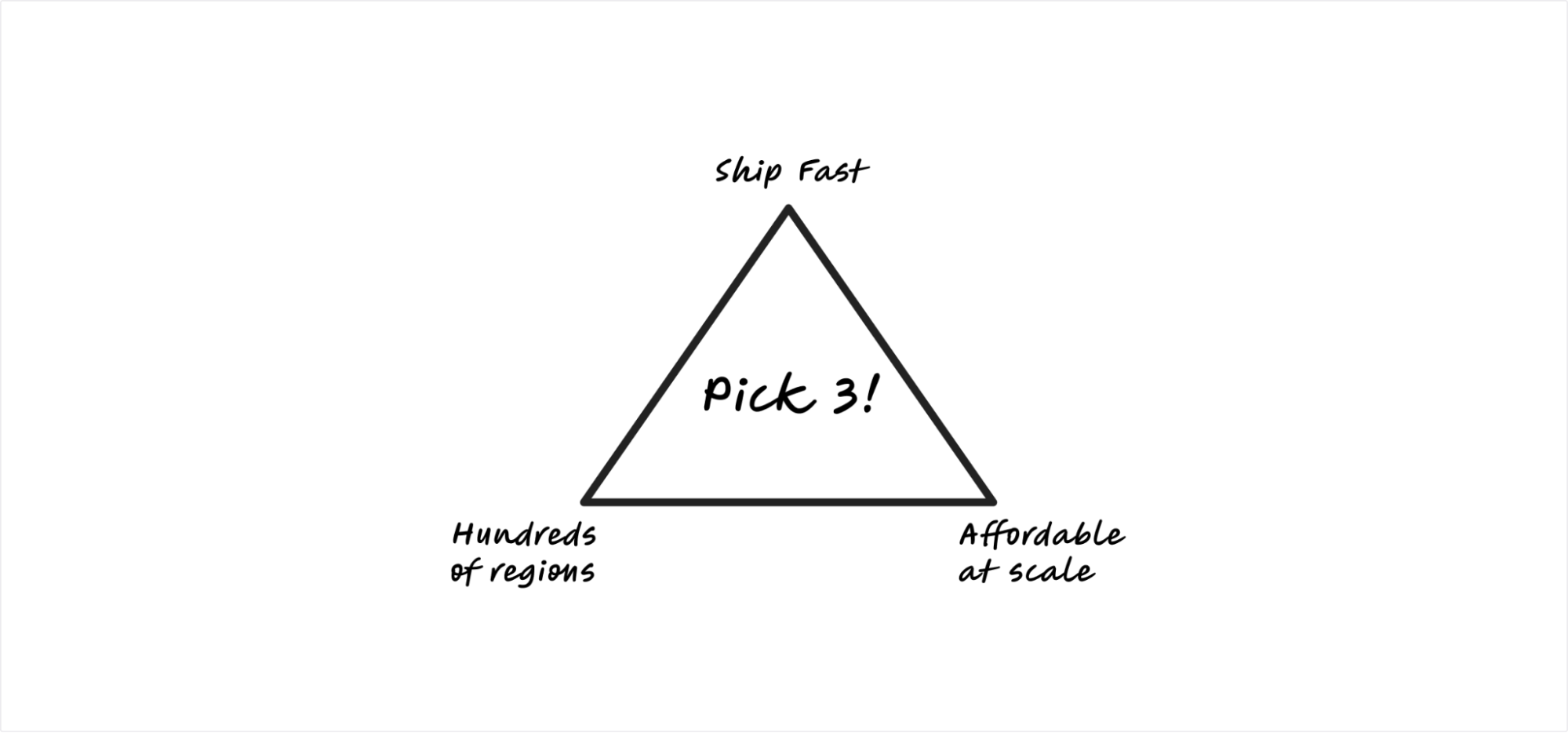

Our SFU and TURN products continue to be one of the most affordable ways to build WebRTC apps at scale, at 5 cents per GB after 1,000 GB of free usage each month.

Partnering with Hugging Face to make realtime AI communication seamless

FastRTC is a lightweight Python library from Hugging Face that makes it easy to stream real-time audio and video into and out of AI models using WebRTC. TURN servers are a critical part of WebRTC infrastructure and ensure that media streams can reliably connect across firewalls and NATs. For users of FastRTC, setting up a globally distributed TURN server can be complex and expensive.

Through our new partnership with Hugging Face, FastRTC users now have free access to Cloudflare’s TURN Server product, giving them reliable connectivity out of the box. Developers get 10 GB of TURN bandwidth each month using just a Hugging Face access token — no setup, no credit card, no servers to manage. As projects grow, they can easily switch to a Cloudflare account for more capacity and a larger free tier.

This integration allows AI developers to focus on building voice interfaces, video pipelines, and multimodal apps without worrying about NAT traversal or network reliability. FastRTC simplifies the code, and Cloudflare ensures it works everywhere. See these demos to get started.

Ship AI-powered realtime apps in days, not weeks

With RealtimeKit, developers can now implement complex real-time experiences in hours. The SDKs abstract away the most time-consuming aspects of WebRTC development while providing APIs tailored to common implementation patterns. Here are a few of the possibilities:

Video conferencing: Add multi-participant video calls to your application with just a few lines of code. RealtimeKit handles the connection management, bandwidth adaptation, and device permissions that typically consume weeks of development time.

Live streaming: Build interactive broadcasts where hosts can stream to thousands of viewers while selectively bringing participants on-screen. The SFU automatically optimizes media routing based on participant roles and network conditions.

Real-time synchronization: Implement watch parties or collaborative viewing experiences where content playback stays synchronized across all participants. The timing API handles the complex delay calculations and adjustments traditionally required.

Voice AI integrations: Add transcription and AI voice participants without building custom media pipelines. RealtimeKit’s media processing APIs integrate with your existing authentication and storage systems rather than requiring separate infrastructure.

When we’ve seen our early testers use the RealtimeKit, it doesn’t just accelerate their existing projects, it fundamentally changes which projects become viable.

Get started with RealtimeKit

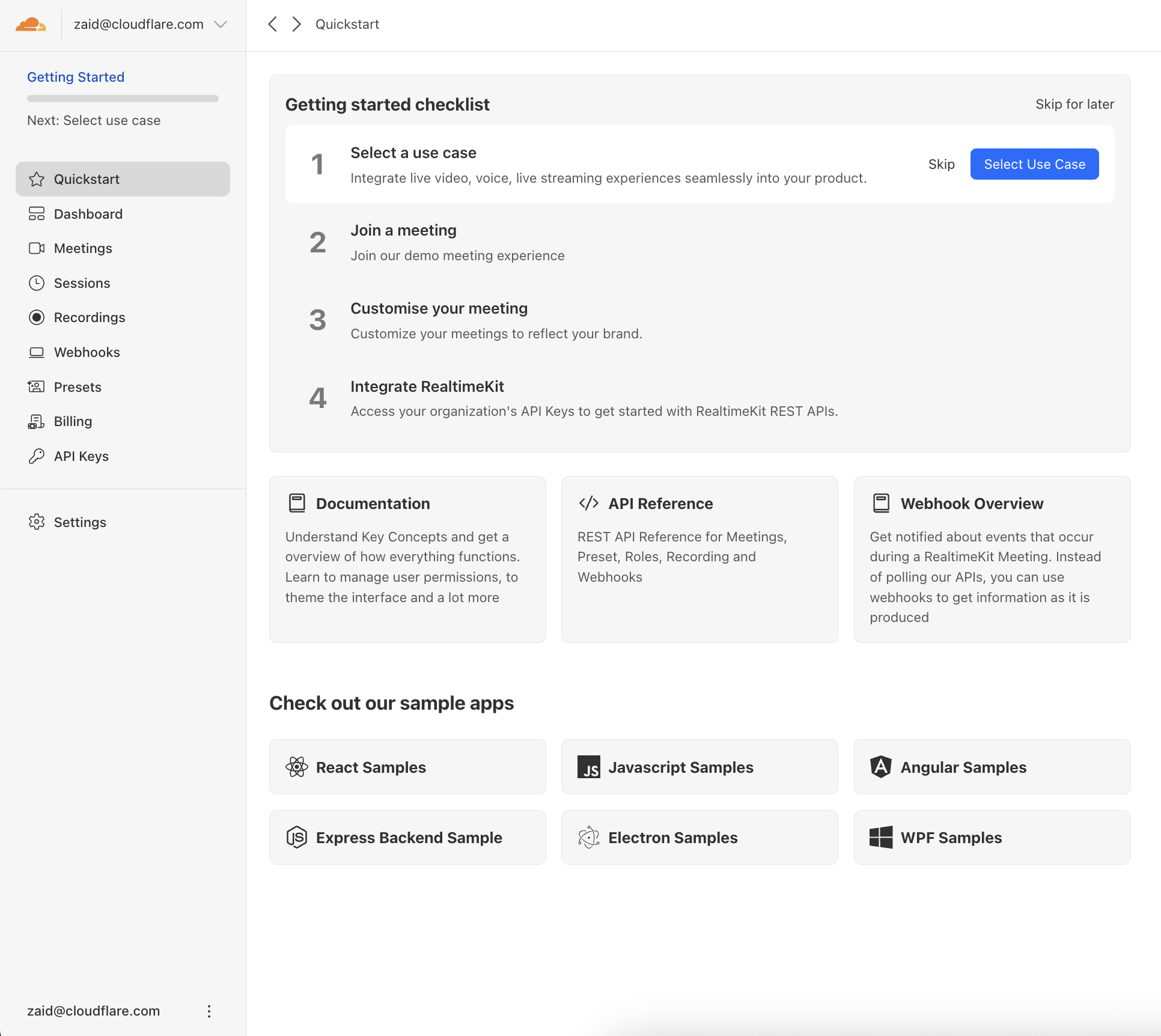

Starting today, you’ll notice a new Realtime section in your Cloudflare Dashboard. This section includes our TURN and SFU products alongside our latest product, RealtimeKit.

RealtimeKit is currently in a closed beta ready for select customers to start kicking the tires. There is currently no cost to test it out during the beta. Request early access here or via the link in your Cloudflare dashboard. We can’t wait to see what you build.

Source:: CloudFlare