Have you ever made a phone call, only to have the call cut as soon as it is answered, with no obvious reason or explanation? This analogy is the starting point for understanding connection tampering on the Internet and its impact.

We have found that 20 percent of all Internet connections are abruptly closed before any useful data can be exchanged. Essentially, every fifth call is cut before being used. As with a phone call, it can be challenging for one or both parties to know what happened. Was it a faulty connection? Did the person on the other end of the line hang up? Did a third party intervene to stop the call?

On the Internet, Cloudflare is in a unique position to help figure out when a third party may have played a role. Our global network allows us to identify patterns that suggest that an external party may have intentionally tampered with a connection to prevent content from being accessed. Although they are often hard to decipher, the ways connections are abruptly closed give clues to what might have happened. Sources of tampering generally do not try to hide their actions, which leaves hints of their existence that we can use to identify detectable ‘signatures’ in the connection protocol. As we explain below, there are other protocol features that are less likely to be spoofed and that point to third party actions. We can use these hints to build signature patterns of connection tampering that can be recognized.

To be clear, there are many reasons a third party might tamper with a connection. Enterprises may tamper with outbound connections from their networks to prevent users from interacting with spam or phishing sites. ISPs may use connection tampering to enforce court or regulatory orders that demand website blocking to address copyright infringement or for other legal purposes. Governments may mandate large-scale censorship and information control.

Despite the fact that everyone knows it happens, no other large operation has previously looked at the use of connection tampering at scale and across jurisdictions. We think that creates a notable gap in understanding what is happening in the Internet ecosystem, and that shedding light on these practices is important for transparency and the long-term health of the Internet. So today, we’re proud to share a view of global connection tampering practices.

The full technical details were recently peer-reviewed and published in “Global, Passive Detection of Connection Tampering” at ACM SIGCOMM, with its public presentation. We’re also announcing a new dashboard and API on Cloudflare Radar that shows a near real-time view of specific connection timeout and reset events – the two mechanisms dominant in tampering experienced by users connecting to Cloudflare’s network globally.

To better understand our perspective, it helps to understand the nature of connection tampering and reasons we’re talking about it.

Global insights for a global audience

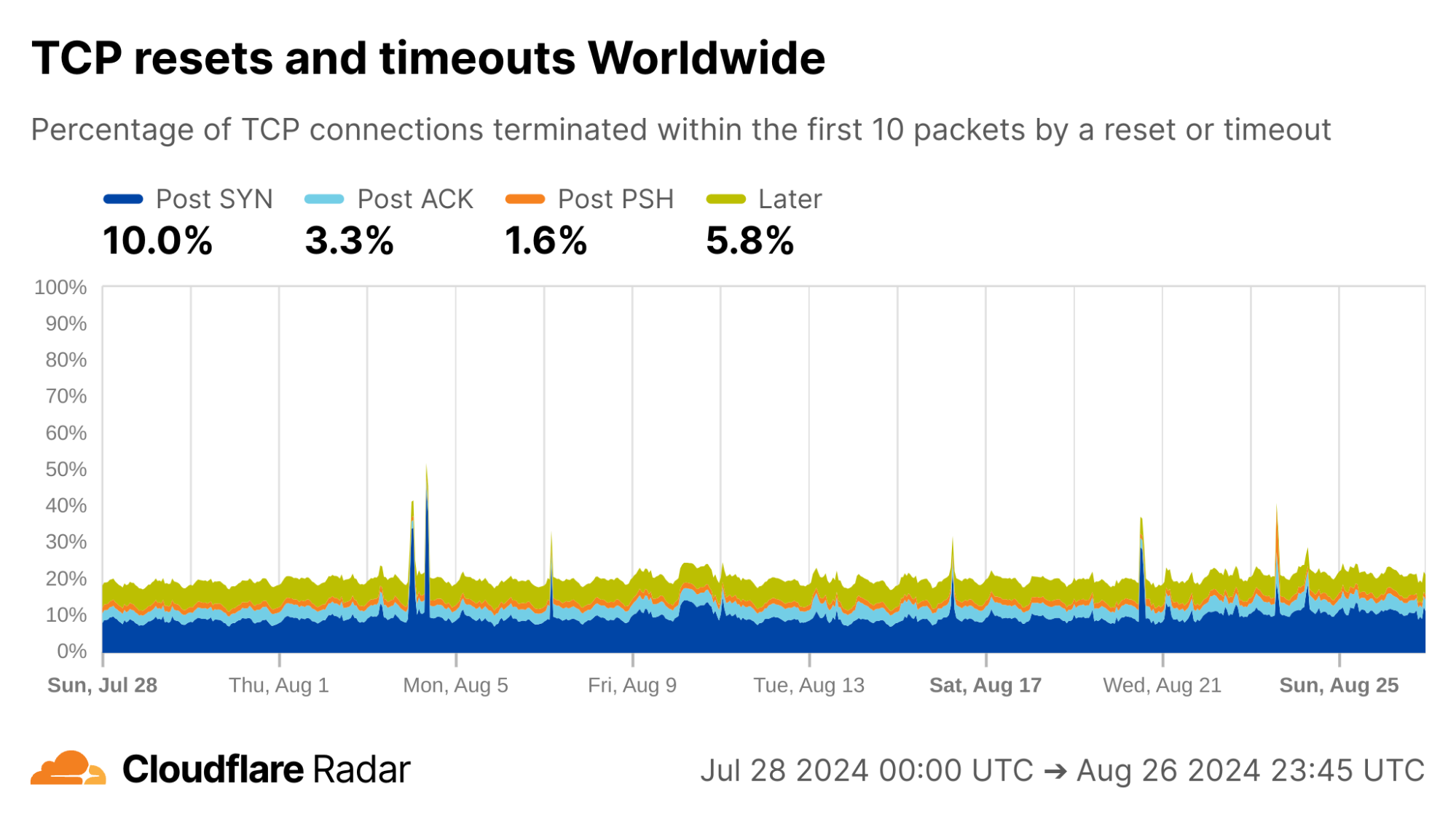

Evidence of connection tampering is visible in networks all around the world. We were initially shocked that, globally, about 20% of all connections to Cloudflare close unexpectedly before any useful data exchange occurs — consistent with connection tampering. Here is a snapshot of these anomalous connections seen by Cloudflare that, as of today, we’re sharing on Radar.

via Cloudflare Radar

It’s not all tampering, but some of it clearly is, as we describe in more detail below. The challenge is filtering through the noise to determine which anomalous connections can confidently be attributed to tampering.

Macro-level analysis and validation

In our work we identified 19 patterns of anomalous connections as being candidate signatures for connection tampering. From those, we found that 14 had been previously reported by active “on the ground” measurement efforts, which presented an opportunity for validation at macro-level: If we observe our tampering signatures from Cloudflare’s network in the same places others observe them from the ground, we could have greater confidence that the signatures capture true cases of connection tampering when observed elsewhere, where there has been no prior reporting. To mitigate the risk of confirmation bias from looking where tampering is known to exist, we decided to look everywhere at the same time.

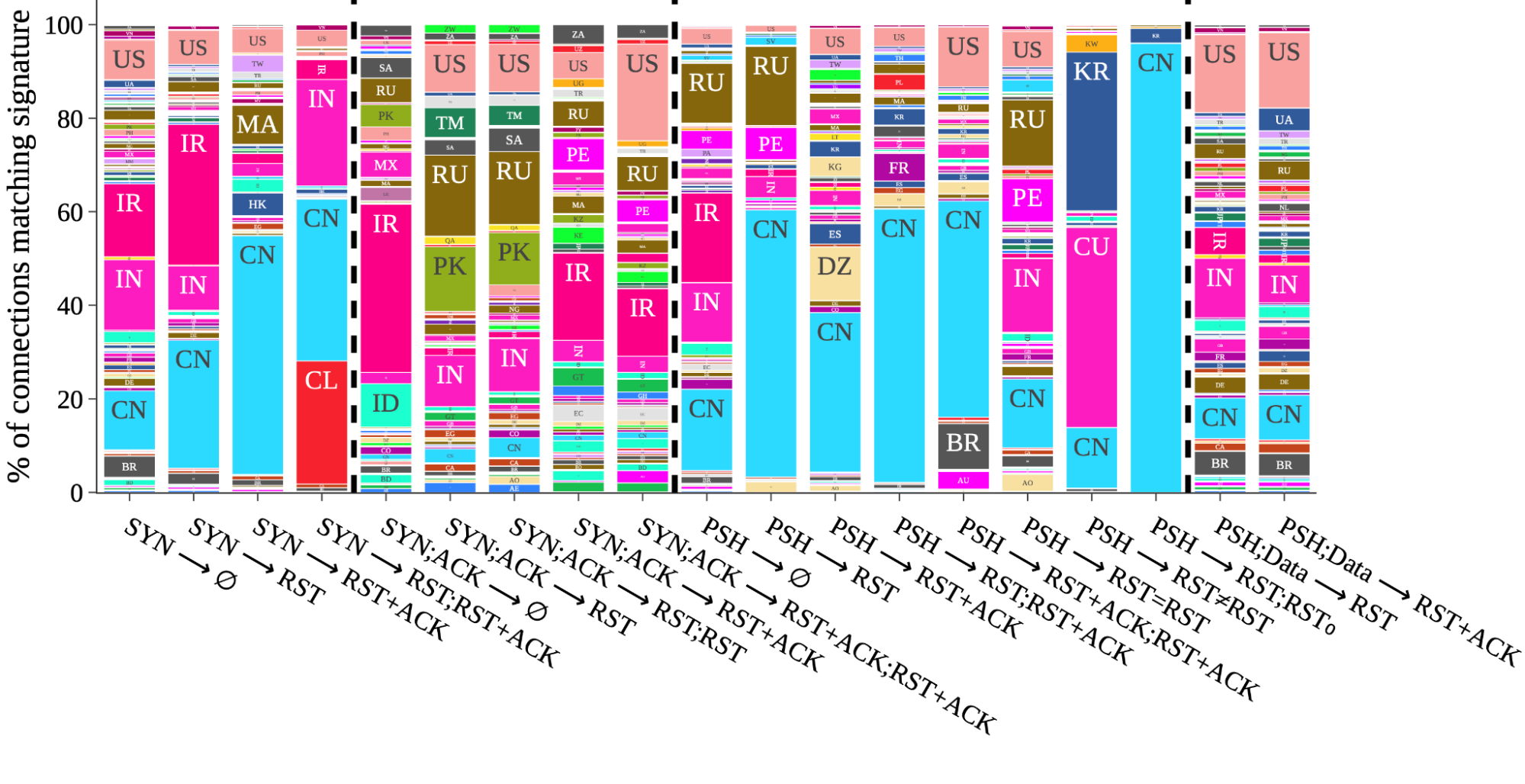

Taking that approach, the figure below, taken from our peer-reviewed study, is a visual side-by-side comparison of each of the 19 signatures. The data is taken from a two-week interval starting January 26, 2023. Within each signature column is the proportion of matching connections broken down by the country where the connection originated. For example, the column third from the right labeled with ⟨PSH → RST;RST0⟩ indicates that we almost exclusively observed that signature on connections from China. Overall, what we find is a mirror of known cases from public and prior reports, which is an indication that our methodology works.

Figure 1: Signature matching across countries: Each column is the total global number of connections matching a specific signature. Within each column is the proportion of connection initiations from individual countries matching that signature.

When we home in on prevalence and set aside the raw number of signature matches, unexpected macro-level patterns emerge. If we focus on the three most populous countries in the world ranked by number of Internet users, connections from China contribute a substantial portion of matches across no fewer than 9 of the signatures. This is perhaps unsurprising, but reinforces prior studies that find evidence of the Great Firewall (GFW) being made of many different deployments and implementations of blocking mandates. Next, matches on connections from India also contribute substantially to nine different signatures, five of which are in common with signatures where China matches feature highly. Looking at the third most populous, the United States, a visible if not substantial proportion of matches appear on all but two of the signatures.

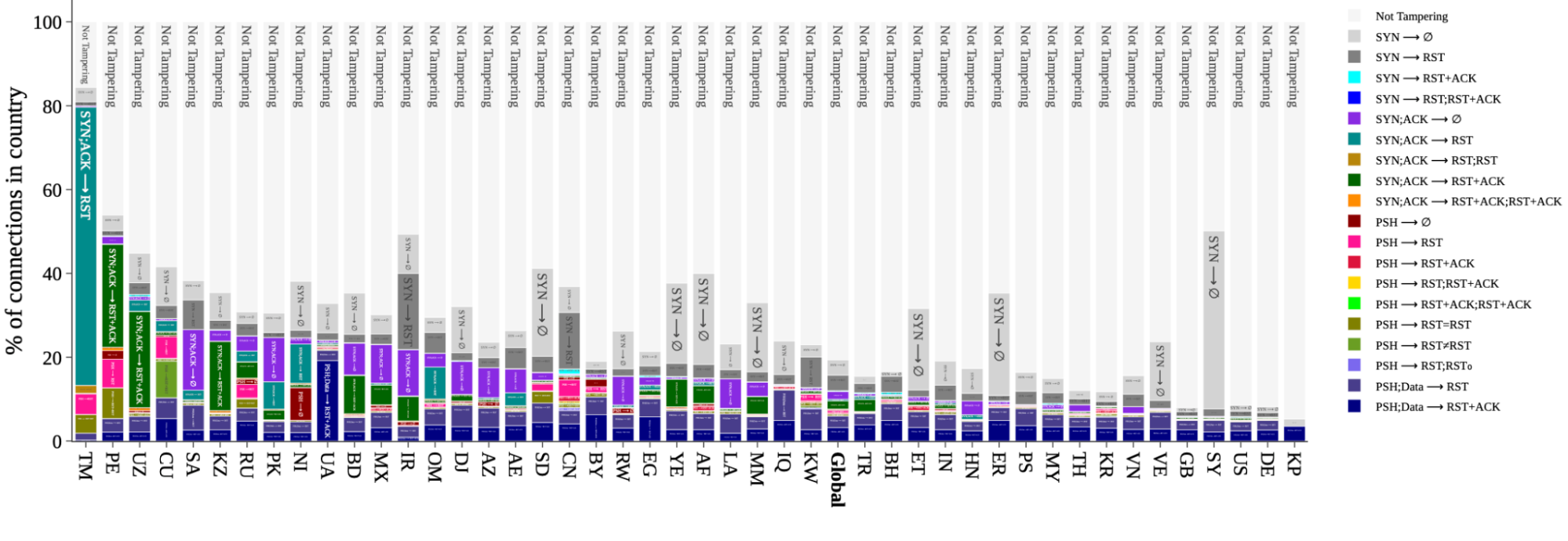

A snapshot of signature distributions per-country, also taken from the peer-reviewed study, appears below for a select set of countries. The global distribution is included for comparison. The dark gray portions marked ⟨SYN → ∅⟩ are included for completeness, but have more non-tampering alternative explanations than the others (for example, as a result of a low-rate SYN flood).

Figure 4: Signature distribution per country: The percentage of connections originating from select countries (and globally) that match a particular signature, or are not tampered with.

From this perspective we again observe patterns that match prior studies. We focus first on rates above the global average, and ignore the noisiest signature ⟨SYN → ∅⟩ in medium-gray; there are simply too many other explanations for a signature match at this earliest possible stage. Among all connections from Turkmenistan (TM), Russia (RU), Iran (IR), and China (CN), roughly 80%, 30%, 40%, and 30%, respectively, match a tampering signature. The data also reveals high signature match rates where no prior reports exist. For example, connections from Peru (PE) and Mexico (MX) match roughly 50% and 25% of the time, respectively; analysis of individual networks in these countries suggests a likely explanation is zero-rating in mobile and cellular networks, where an ISP allows access to certain resources (but not others) at no cost. If we look below the global average, Great Britain (GB), the United States (US), and Germany (DE) each match a signature on about 10% of connections.

The data makes clear that connection tampering is widespread, and close to many users, if not most. In many ways, it’s closer than most know. To see why, we describe connection tampering using a very familiar communication tool, the telephone.

Explaining tampering with telephone calls

Connection tampering is a way for a third party to block access to particular content. However, it’s not enough for the third party to know the type of content it wants to block. The third party can only block an identity by name.

Ultimately, connection tampering is possible only by accident – an unintended side effect of protocol design. On the Internet, the most common identity is the domain name. In a communication on the Internet, the domain name is most often transmitted in the “server name indication (SNI)” field in TLS – exposed in cleartext for all to see.

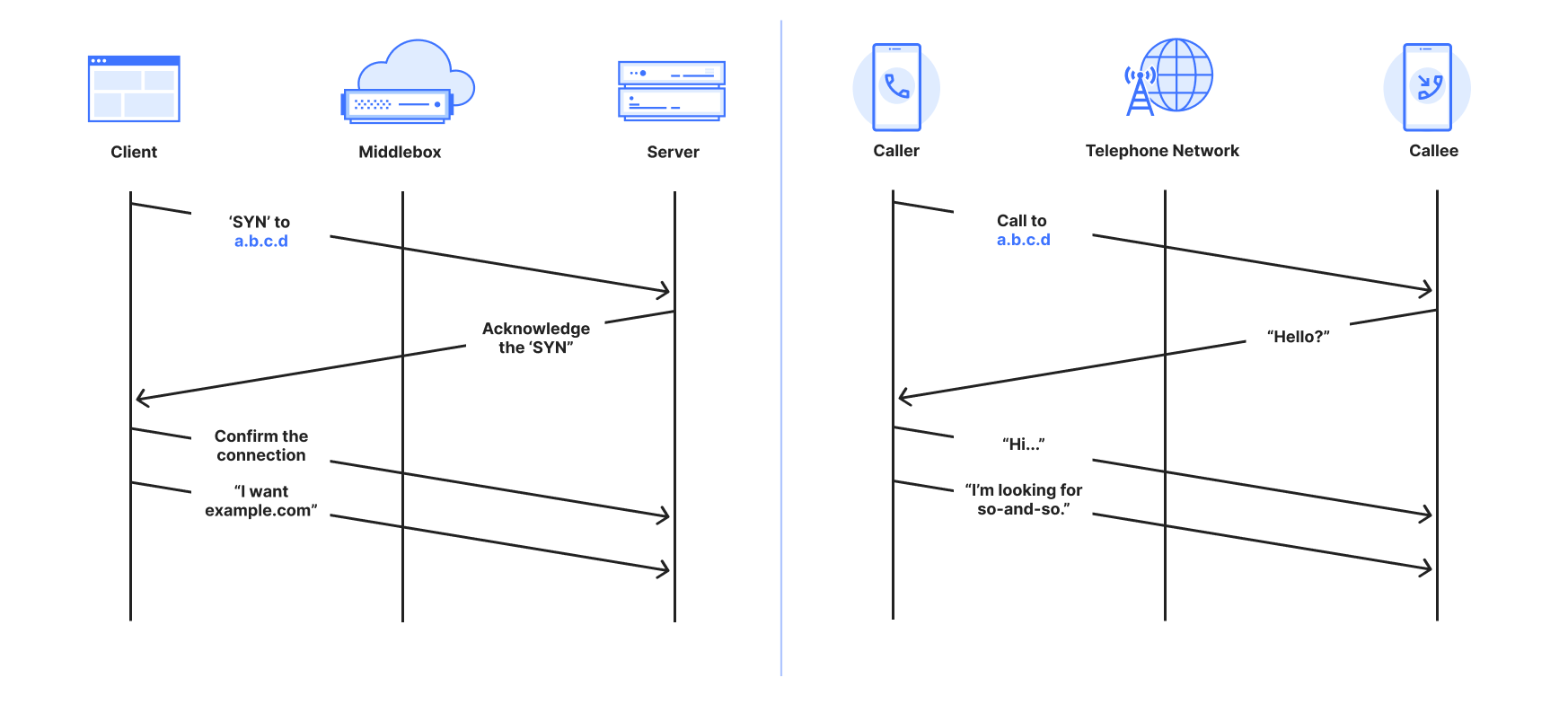

To understand why this matters, it helps to understand what connection tampering looks like in human-to-human communications without the Internet. The Internet itself looks and operates much like the postal system, which relies only on addresses and never on names. However, the way most people use the Internet is much more like the “plain old telephone system,” which requires names to succeed.

In the telephone system, a person first dials a phone number, not a name. The call is connected and usable only after the other side answers, and the caller hears a voice. The caller asks for a name only after the call is connected. The call manifests in the system as energy signals that do not identify the communicating parties. Finally, after the call ends, a new call is required to communicate again.

On the Internet, a client such as a browser “establishes a connection.” Much like a telephone caller, it initiates a connection request to a server’s number, which is an IP address. The longest-standing “connection-oriented” protocol to connect two devices is called the Transmission Control Protocol, or TCP. The domain name is transmitted in isolation from the connection establishment, much like asking for a name once the phone is answered. The connections are “logical,” identified by metadata that does not identify the communicating parties. Finally, a new connection is established with each new visit to a website.

Comparison between a TCP connection and a telephone call

What happens if a telephone company is required to prevent a call to some party? One option is to modify or manipulate phone directories so a caller can’t find the phone number they need to dial; this is the essence of DNS filtering. A second option is to block all calls to the phone number, but this inadvertently affects others, just like IP blocking does.

Once a phone call is initiated, the only way for the telephone company to know who is being called is to listen in on the call and wait for the caller to say, “is so-and-so there?” or “can I speak with so-and-so?” Mobile and cellular calls are no exception. The idea that the number we call is the person who will answer is just an expectation – it has never been the reality. For example, a parent could get a number to give to their child, or a taxi company could leave the mobile phone with whomever is on-shift at the time. As a result, the telephone company must listen in. Once it hears a certain name it can cut the call; neither side would have any idea what has happened – this is the very definition of connection tampering on the Internet.

For the purpose of establishing a communication channel, phone calls and TCP connections are at least comparable, and arguably exactly the same – not least because the domain name is transmitted separately from establishing a connection.

Similarly, on the Internet, the only way for a third party to know the intended recipient of a connection is to “look inside” of packets as they are transmitted. Where a telephone company would have to listen for a name, a third party on the Internet waits to see something it does not like, most often a forbidden name. Recall from above the unintended side-effect of the protocol: the name is visible in the SNI, which is required to help encrypt the data communication. When that happens, the third party causes one or both devices to close the connection by either dropping messages or injecting specially-crafted messages that cause the communicating parties to abort the connection.

The mechanisms to trigger tampering begin with deep packet inspection (DPI), which means looking into the data portions that lie beyond the address and other metadata belonging to the connection. It’s safe to say that this functionality does not come for free; whether it’s an ISP’s router or a parental proxy, DPI is an expensive operation that gets more expensive at large scale or high speed.
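To make the point about cleartext names concrete, here is a minimal sketch of how any on-path observer could read the SNI out of the first TLS data packet. It is an illustration only, not a description of any particular middlebox: the helper names `build_client_hello` and `extract_sni` are hypothetical, the ClientHello is a stripped-down toy with a single extension, and the parser assumes the whole message fits in one TLS record.

```python
import struct
from typing import Optional


def build_client_hello(server_name: str) -> bytes:
    """Build a toy TLS ClientHello record that carries only an SNI extension."""
    name = server_name.encode("ascii")
    entry = b"\x00" + struct.pack("!H", len(name)) + name   # name_type 0 = host_name
    sni_data = struct.pack("!H", len(entry)) + entry         # server_name_list
    extensions = struct.pack("!HH", 0, len(sni_data)) + sni_data  # extension type 0 = SNI
    body = (
        b"\x03\x03"                                 # legacy_version
        + bytes(32)                                 # random (all zeros in this toy)
        + b"\x00"                                   # empty session id
        + struct.pack("!H", 2) + b"\x13\x01"        # one cipher suite
        + b"\x01\x00"                               # one compression method (null)
        + struct.pack("!H", len(extensions)) + extensions
    )
    handshake = b"\x01" + len(body).to_bytes(3, "big") + body  # type 1 = ClientHello
    return b"\x16\x03\x01" + struct.pack("!H", len(handshake)) + handshake


def extract_sni(record: bytes) -> Optional[str]:
    """Return the cleartext server name from a ClientHello record, or None."""
    # Require a handshake record (0x16) whose first message is a ClientHello (0x01).
    if len(record) < 9 or record[0] != 0x16 or record[5] != 0x01:
        return None
    try:
        pos = 9 + 2 + 32                                          # headers, version, random
        pos += 1 + record[pos]                                    # session id
        pos += 2 + int.from_bytes(record[pos:pos + 2], "big")     # cipher suites
        pos += 1 + record[pos]                                    # compression methods
        ext_end = pos + 2 + int.from_bytes(record[pos:pos + 2], "big")
        pos += 2
        while pos + 4 <= min(ext_end, len(record)):
            ext_type, ext_len = struct.unpack("!HH", record[pos:pos + 4])
            pos += 4
            if ext_type == 0:
                # Skip the list length (2 bytes) and the name type (1 byte).
                name_len = int.from_bytes(record[pos + 3:pos + 5], "big")
                return record[pos + 5:pos + 5 + name_len].decode("ascii")
            pos += ext_len
    except (IndexError, struct.error, UnicodeDecodeError):
        return None
    return None
```

No keys or decryption are involved at any point, which is why the name is available to a third party. Even this toy parser has to walk several variable-length fields on every connection, which hints at why DPI gets expensive at scale.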

One last point worth mentioning is that weaknesses in telephone tampering similarly appear in connection tampering. For example, the sound of Jean and Gene are indistinguishable to any ear, despite being different names. Similarly, tampering with connections to Twitter’s short-form name “t.co” would also affect “microsoft.com” – and has.
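One plausible way for this kind of collateral damage to arise, assumed here purely for illustration, is a rule that matches a forbidden name as a plain substring of the observed server name. The sketch below contrasts that with a stricter label-aware match; both function names are hypothetical and neither is taken from a real tampering system.

```python
def naive_match(server_name: str, rule: str) -> bool:
    """Substring match: cheap to implement, but prone to over-blocking."""
    return rule in server_name


def label_aware_match(server_name: str, rule: str) -> bool:
    """Match the exact name or any subdomain of it, and nothing else."""
    return server_name == rule or server_name.endswith("." + rule)


# "microsoft.com" ends in "...soft.com", which contains the characters "t.co".
overblocked = naive_match("microsoft.com", "t.co")
precise = label_aware_match("microsoft.com", "t.co")
```

Here `overblocked` is true while `precise` is false, mirroring the Jean and Gene problem: the rule cannot tell two different names apart.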

A live view of tampering during Mahsa Amini protests

Before we delve deeply into the technical, there is one more motivation that is personal to many at Cloudflare. Transparency is important and the reason we started this work, but it was after seeing the data during the Mahsa Amini protests in Iran in 2022 that we committed internally to share the data on Radar.

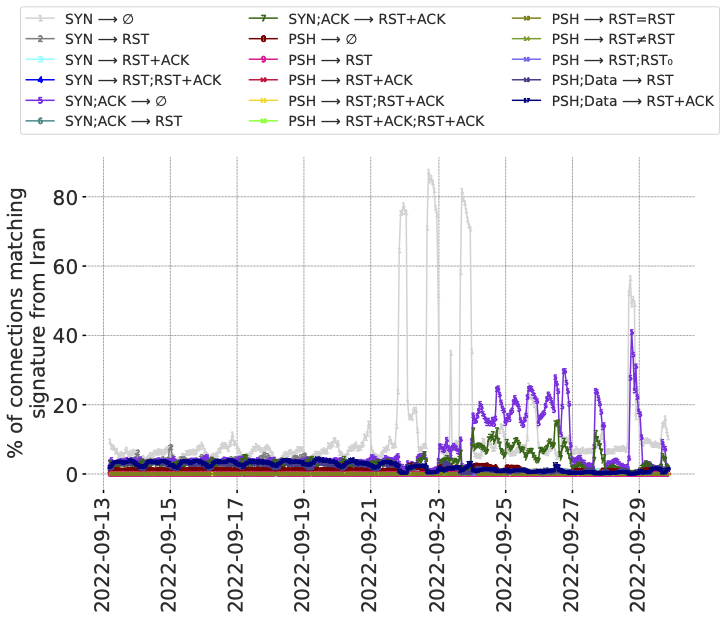

Figure 8: Signature match rates longitudinally in Iran during a period of nation-wide protests. (𝑥-axis is local time.)

The figure below is for connections from Iran during 17 days overlapping the protests. The plot-lines track individual signals of anomalous connections, including signatures of different types of connection tampering. This data pre-dates the Radar service, so we have elected to share this representation from the peer-reviewed paper. It was also the first visual example of the value of the data if it could be shared via Radar.

From the data there are two observations that stick out. First is the way that the lines appear stable before the protests, then increase after the protests began. Second is the variation between the lines over time, in particular the lines in light gray, dark purple, and dark green. Recall that each line is a different tampering signature, so the variation between lines suggests changes in the underlying causes – either the mechanisms at work, or the traffic that invokes them.

We emphasize that a signature match does not, in itself, mean there is tampering. However, in the case of Iran in 2022 there were public reports of blocking of various forms. The methods in use at the time, specifically Server Name Indication (SNI)-based blocking of access to content, had also previously been well-documented, and matched with our observations represented by the figure above.

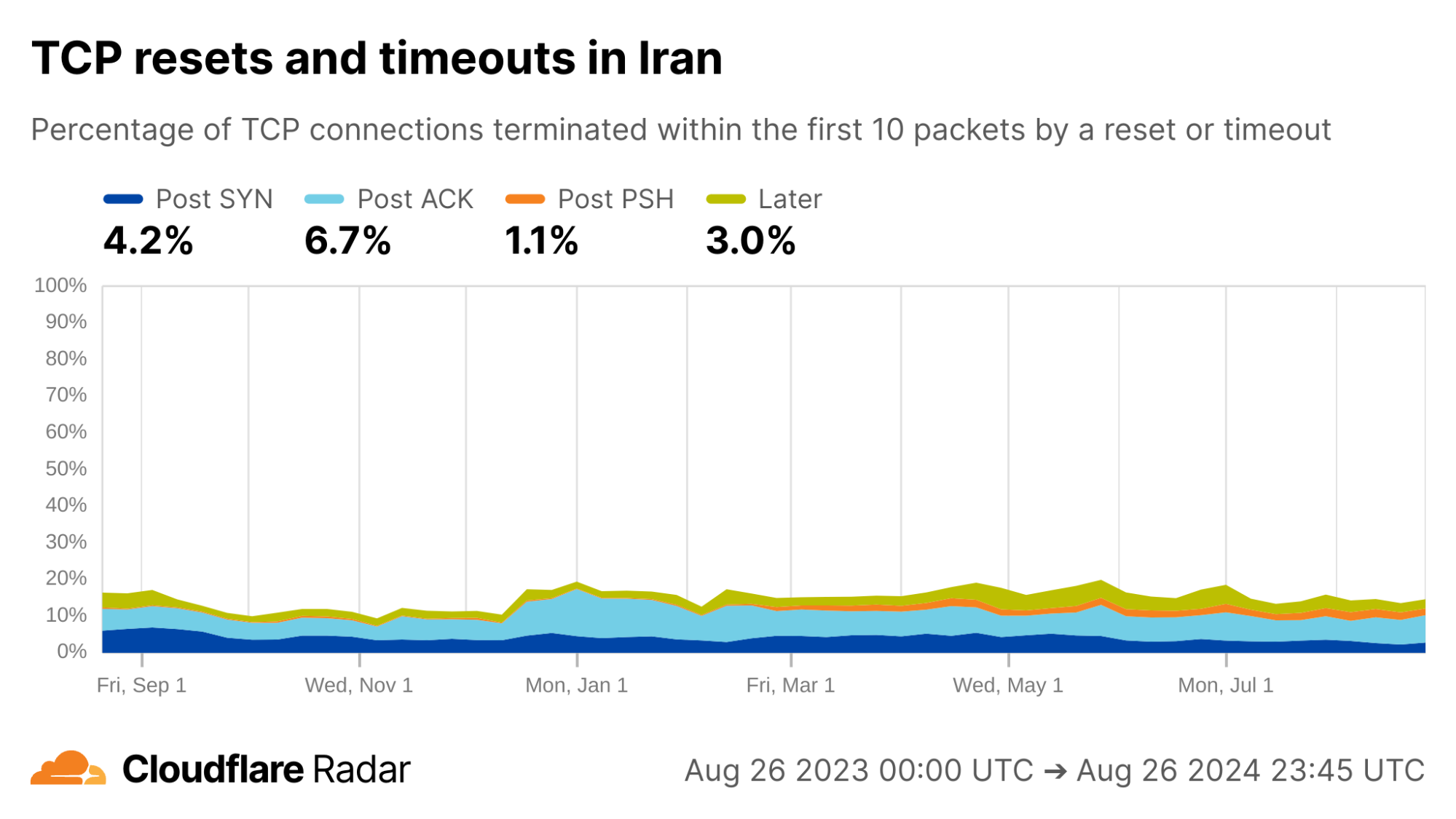

What about today? Below we see the Radar view of the twelve months from August 2023 to August 2024. Each color represents a different stage of the connection where tampering might happen. In the previous 12 months, TCP connection anomalies in Iran are lower than the worldwide averages, overall, but appear significantly higher in the portion of anomalies represented by the light-blue region. This “Post ACK” phase of communication is often associated with SNI-based blocking. (In the graph above, the relevant signatures are represented by the dark purple and dark green lines.) Alongside, the changing proportions of the different plot-lines since mid-December 2023 suggest that techniques have been changing over time.

via Cloudflare Radar

The importance of an open network measurement community

As a testament to the importance of open measurement and research communities, this work very literally “builds on the shoulders of giants.” It was produced in collaboration with researchers at the University of Maryland, École Polytechnique Fédérale de Lausanne, and the University of Michigan, but does not exist in isolation. There have been extensive efforts to measure connection tampering, most of which come from the censorship measurement community. The bulk of that work consists of active measurements, in which researchers craft and transmit probes in or along networks and regions to identify blocking behavior. Unsurprisingly, active measurement has both strengths and weaknesses (as described in Section 2 of the paper).

The counterpart to active measurement, and the focus of our project, is passive measurement, which takes an “observe and do nothing” approach. Passive measurement comes with its own strengths and weaknesses but, crucially, it relies on having a good vantage point such as a large network operator. Active and passive measurements are most effective when working in conjunction, in this case helping to paint a more complete picture of the impact of connection tampering on users.

Most importantly, when embarking upon any type of measurement, great care must be taken to understand and evaluate the safety of the measurement, since the risks imposed on people and networks are often indirect, or hidden from view.

Limitations of our data

We have no doubt about the importance of being transparent about connection tampering, but we also need to be explicit about the limits on the insights that can be gleaned from the data. As passive observers of connections to the Cloudflare network – and only the Cloudflare network – we are only able to see or infer the following:

Signs of connection tampering, but not where it happened. Any software or device between the client’s application and the server systems can tamper with a connection. The list ranges from purpose-built systems, to firewalls in the enterprise or home broadband router, and protection software installed on home or school computers. All we can infer is where the connection started (albeit at the limits of geolocation inaccuracies inherent in the Internet’s design).

(Often, but not always) What triggered the tampering, but not why. Typically, tampering systems are triggered by domain names, keywords, or regular expressions. With enough repetition, and manual inspection, it may be possible to identify the likely cause of tampering, but not the reasons. Many tampering system designs are prone to unintended consequences, among them the t.co example mentioned above.

Who and what is affected, but not who or what could be affected. As passive observers, there are limits on the kinds of inferences we can make. For example, observable tampering on 1000 out of 1001 connections to example.com suggests that tampering is likely on the next connection attempt. However, that says nothing about connections to another-example.com.

Data, data, data: Extracting signals from the noise

If you just want to get and use the data on Radar, see our “how to” guide. Otherwise, let’s understand the data itself.

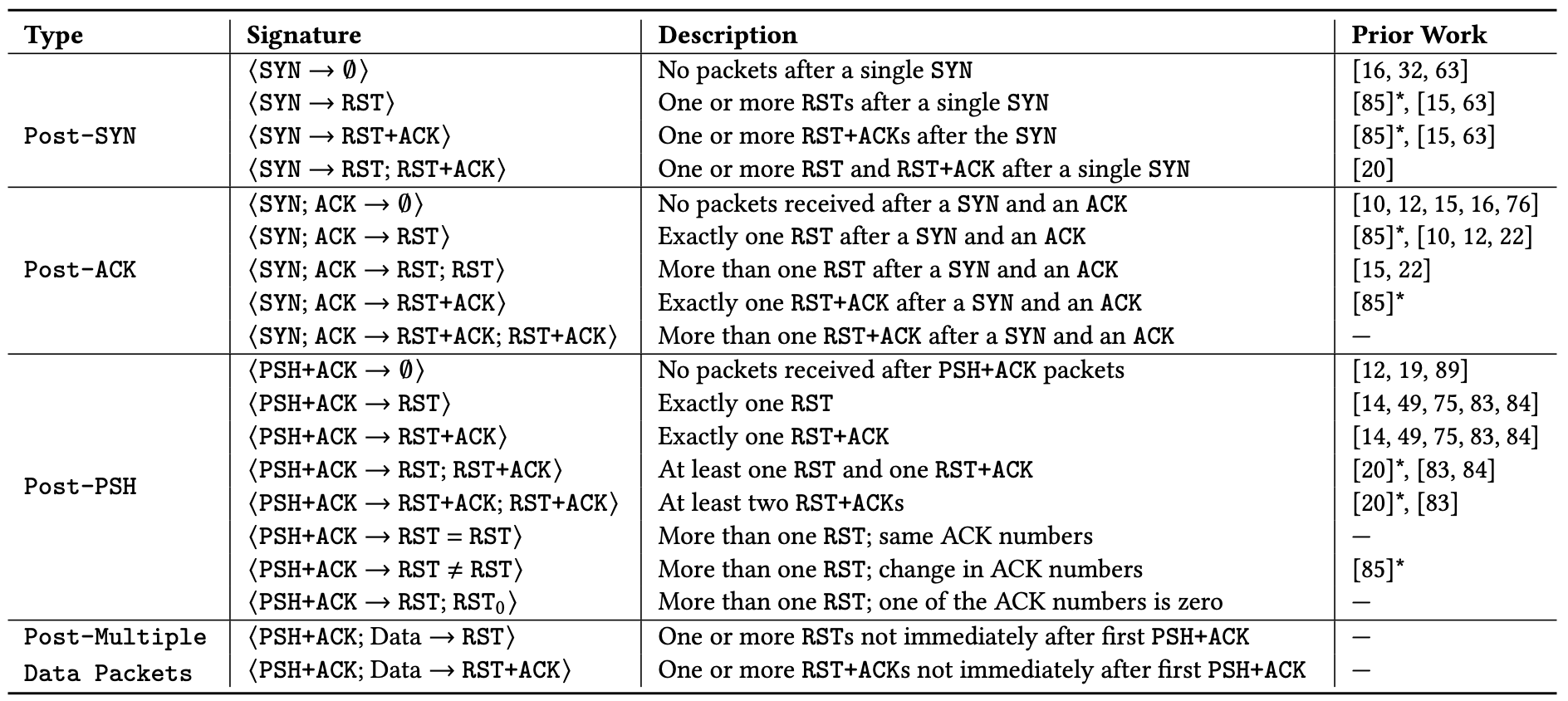

The focus of this work is TCP. In our data there are two mechanisms available to a third-party to force a connection to close: dropping packets to induce timeouts or injecting forged TCP RST packets, each with various deployment choices. Individual tampering signatures may be reflections of those choices. For comparison, a graceful TCP close is initiated with a FIN packet.

Connection tampering signatures

Our detection mechanism evaluates sets of packets in a connection against a set of signatures for connection tampering. The signatures are hand-crafted from signatures identified in prior work, and by analyzing samples of connections to Cloudflare’s network that we classify as anomalous – connections that close early, and ungracefully by way of a RST packet or timeout within the first 10 packets from the client. We analyzed the samples and found that 19 patterns accounted for 86.9% of all possibly tampered connections in the samples, shown in the table below.
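The definition of an anomalous connection above can be sketched roughly as follows. This is a simplified reading of the prose, not the production classifier: the `ObservedConnection` type, the flag strings, and the treatment of timeouts are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List

MAX_CLIENT_PACKETS = 10  # only the first 10 packets from the client are considered


@dataclass
class ObservedConnection:
    # TCP flags of packets received from the client, in arrival order,
    # for example ["SYN", "ACK", "PSH+ACK", "RST"] (hypothetical encoding).
    client_flags: List[str]
    # True if the server gave up waiting for the client (hypothetical field).
    timed_out: bool = False


def is_anomalous(conn: ObservedConnection) -> bool:
    """True if the connection closes early and ungracefully."""
    for flags in conn.client_flags[:MAX_CLIENT_PACKETS]:
        if "RST" in flags:
            return True    # ungraceful close inside the sampled window
        if "FIN" in flags:
            return False   # graceful close came first
    # No RST or FIN in the window: anomalous only if the connection
    # went silent while still within the first 10 packets.
    return conn.timed_out and len(conn.client_flags) <= MAX_CLIENT_PACKETS
```

A connection flagged this way is only a candidate: it still has to match one of the 19 signatures, and even then the match is not proof of tampering.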

Table 1: The comprehensive set of tampering signatures we identify through global passive measurements.

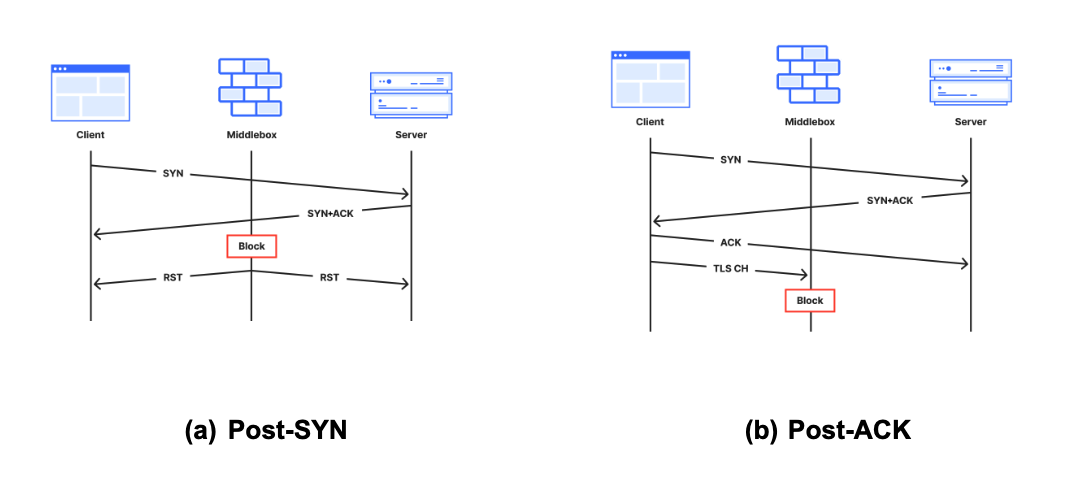

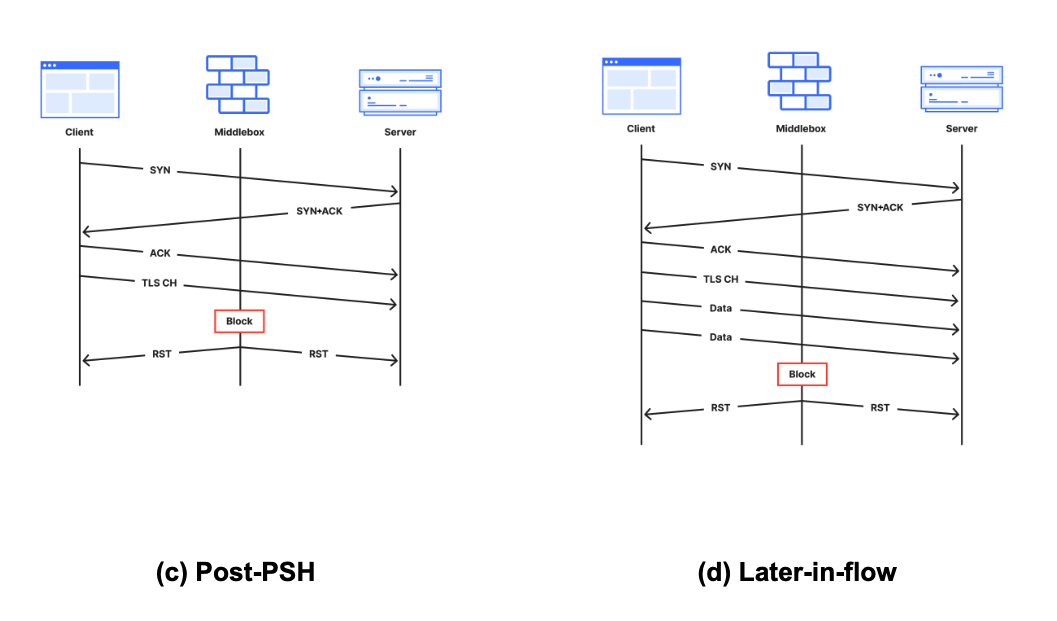

To help reason about tampering, we also classed the 19 signatures above according to the stage of the connection lifetime in which they appear. Each stage implies something about the middlebox, as described below alongside corresponding sequence diagrams:

(a) Post-SYN (mid-handshake): Tampering is likely triggered by the destination IP address because the middlebox has likely not seen application data, which is typically transmitted after the handshake completes.

(b) Post-ACK (immediately after handshake): The connection is established and immediately forced to close before seeing any data. It is possible, even likely, that the middlebox has seen a data packet; for example, the Host header in HTTP or the SNI field in TLS.

(c) Post-PSH (after first data packet): The middlebox has definitely seen the first data packet because the server has received it. The middlebox may have been waiting for a packet with a PSH flag, typically set to indicate data in the packet should be delivered to the application on receipt, without delay. The middlebox is likely a monster-on-the-side because it permits the offending packet to reach the destination.

(d) Later-in-flow (after multiple data packets): Tampering at later stages in the connection (not immediately after the first data packet, but still within the first 10 packets). The prevalence of encrypted data in TLS makes this the least likely stage for tampering to occur. The likely triggers are keywords appearing in cleartext later in (HTTP) connections, or enterprise proxies and parental protection software that have visibility into encrypted traffic and can reset connections when certain keywords are encountered.
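The four stages above can be expressed as a small decision procedure over the packets the server received before the connection was reset or timed out. The sketch below is a loose interpretation with assumed inputs (a list of flag strings per client packet) and a hypothetical function name; the actual signatures in the paper are more specific than these four buckets.

```python
from typing import List


def classify_stage(flags_before_close: List[str]) -> str:
    """Map the client packets seen before a RST or timeout to a connection stage."""
    # Count data-bearing packets, approximated here by the PSH flag.
    data_packets = sum(1 for flags in flags_before_close if "PSH" in flags)
    if data_packets > 1:
        return "later-in-flow"
    if data_packets == 1:
        return "post-psh"
    if "ACK" in flags_before_close:
        return "post-ack"      # handshake completed, no data reached the server
    if flags_before_close[:1] == ["SYN"]:
        return "post-syn"      # mid-handshake
    return "unknown"
```

For example, a client whose Client Hello is dropped by a middlebox appears to the server as `["SYN", "ACK"]` followed by silence, which this sketch places in the Post-ACK stage.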

Accounting for alternative explanations

How can we be confident that the signatures above detect middlebox tampering, and not just atypical client behavior? One of the challenges of passive measurement is that we do not have full visibility into the clients connecting to our network, so absolute positives are hard if not impossible. Instead, we look for strong positive evidence of tampering, which must begin with identifying potential false positives.

We are aware of the following sources of false positives that can be hard to disambiguate from true sources of tampering. All but the last occur in the first two stages of the connection, before data packets are received.

Scanners are client-side applications that probe servers to elicit responses. Some scanner software uses fixed bits in the header to self-identify, which helps us filter. For example, we found that ZMap accounts for approximately 1% of all ⟨SYN → RST⟩ signature matches.

SYN flood attacks are another likely source of false positives, especially for signatures in the Post-SYN connection stage like the ⟨SYN → ∅⟩ and ⟨SYN → RST⟩ signatures. These are less likely to appear in our dataset collection, which happens after the DDoS protection systems.

Happy Eyeballs is a common technique used by dual-stack clients in which the client initiates an IPv6 connection to the server and, with some delay to favor IPv6, also makes an IPv4 connection. The client keeps the connection that succeeds first and drops the other. Clients that cease transmission or close the connection with a RST instead of a FIN would show up in the data, matching the ⟨SYN → RST⟩ signature.

Browser-triggered RSTs may appear at any stage of the connection, but especially for signatures that match later in a connection (after multiple data packets). It might be triggered, for example, by a user closing a browser tab. Unlike targeted tampering, however, RSTs originating from browsers are unlikely to be biased towards specific services or websites.
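As an illustration of filtering on self-identifying header bits, the sketch below drops signature matches whose SYN carries the IP-ID value 54321, which ZMap is widely reported to use by default. Treat that constant as an assumption to verify against the scanner's own documentation; the record layout and function names are hypothetical.

```python
from typing import Dict, List

# Assumption: ZMap's default probes set the IPv4 identification field to 54321.
ZMAP_DEFAULT_IP_ID = 54321


def is_probable_zmap_probe(syn_ip_id: int) -> bool:
    """Heuristic: the SYN carries the scanner's fixed IP-ID value."""
    return syn_ip_id == ZMAP_DEFAULT_IP_ID


def filter_scanner_matches(matches: List[Dict[str, int]]) -> List[Dict[str, int]]:
    """Keep only signature matches that do not look like scanner probes."""
    return [m for m in matches if not is_probable_zmap_probe(m["syn_ip_id"])]
```

A filter like this removes one known source of noise, but it cannot catch scanners that randomize or change the field.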

How can we separate legitimate client-initiated false positives from third-party tampering? We seek an evidence-based approach to distinguish tampering signatures from other signals within the dataset. For this we turn to individual bits in the packet headers.

Signature validation – letting the data speak

Signature matches in isolation are insufficient to make good determinations. Alongside, we can find further supporting evidence of their accuracy by examining connections in aggregate – if the cause is tampering, and tampering is targeted, then there must be other patterns or markers in common. For example, we expect browser behavior to appear worldwide; however, as we showed above, signatures that match on connections in only some places or some time intervals stick out.

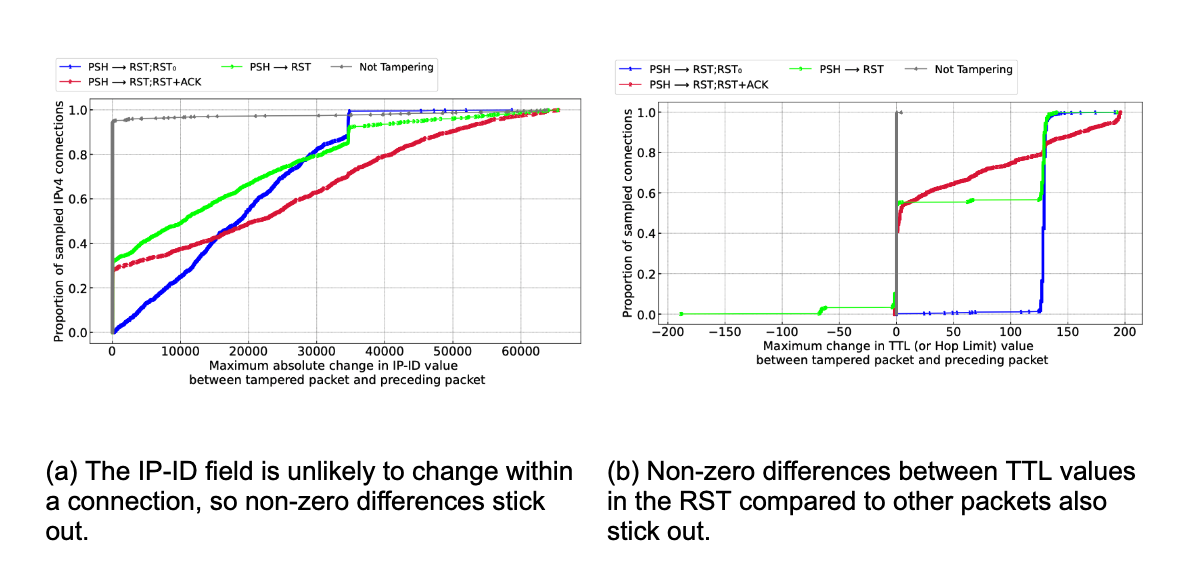

Similarly, we expect certain characteristics in contiguous packets within a connection to also stick out, and indeed they do, namely in the IP-ID and TTL fields in the IP header.

The IP-ID (IP identification) field in the IPv4 packet header is usually either a fixed per-connection value or a value that the client increments for each subsequent packet it sends. In other words, we expect the change in IP-ID value in subsequent packets sent from the same client to be small. Thus, large changes in IP-ID value between subsequent packets are unexpected in normal connections, and could be used as an indicator of packet injection. This is exactly what we see in the figure above, marked (a), for a select set of signatures.

The Time-to-Live (TTL) field offers another clue for detecting injected packets. Here, too, most client implementations use the same TTL for each packet sent on a connection, usually set initially to either 64 or 128 and decremented by every router along the packet’s route. If a RST packet does not have the same TTL as other packets in a connection, it’s a strong signal that it was injected. Looking at the figure above, marked (b), we can see marked differences in TTLs, indicating the presence of a third party.

We strongly encourage readers to read the underlying details of how and why these make sense. Connections with high maximum IP-ID and TTL differences give positive evidence for traffic tampering, but the absence of these signals does not necessarily mean that tampering did not occur, as some middleboxes are known to copy IP header values including the IP-ID and TTL from the original packets in the connection. Our interest is in responsibly ensuring our dataset has indicative value.
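The two header checks can be sketched as a pair of helpers that compute the maximum IP-ID and TTL differences between consecutive client packets in a connection. The `Packet` type and both thresholds are hypothetical choices for the example, not values taken from the paper.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Packet:
    ip_id: int   # IPv4 identification field (16 bits)
    ttl: int     # time-to-live as observed at the server


def max_ip_id_delta(packets: List[Packet]) -> int:
    """Largest IP-ID change between consecutive packets, allowing for 16-bit wraparound."""
    deltas = [
        min((b.ip_id - a.ip_id) % 65536, (a.ip_id - b.ip_id) % 65536)
        for a, b in zip(packets, packets[1:])
    ]
    return max(deltas, default=0)


def max_ttl_delta(packets: List[Packet]) -> int:
    """Largest TTL change between consecutive packets."""
    deltas = [abs(b.ttl - a.ttl) for a, b in zip(packets, packets[1:])]
    return max(deltas, default=0)


def has_injection_evidence(
    packets: List[Packet],
    ip_id_threshold: int = 1000,  # hypothetical threshold
    ttl_threshold: int = 2,       # hypothetical threshold
) -> bool:
    """Positive evidence only: a False result does not rule out tampering."""
    return (
        max_ip_id_delta(packets) > ip_id_threshold
        or max_ttl_delta(packets) > ttl_threshold
    )
```

Because some middleboxes copy IP-ID and TTL from the original packets, a result of `False` here says nothing either way; the checks are useful only as supporting evidence when they fire.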

There is one last caveat: While our tampering signatures capture many forms of tampering, there is still potential for false negatives for connections that were tampered with but escaped our detection. Some examples are connections terminated after the first 10 packets (since we don’t sample that far), FIN injection (a less common alternative to RST injection), or connections where all packets are dropped before reaching Cloudflare’s servers. Our signatures also do not apply to UDP-based protocols such as QUIC. We hope to expand the scope of our connection tampering signatures in the future.

Case studies

To get a sense of how this looks on the Cloudflare network, below we provide further examples of TCP connection anomalies that are consistent with OONI reports of connection tampering.

For additional insights from this specific study, see the full technical paper and presentation. For other regions and networks not listed below, please see the new data on Radar.

Pakistan

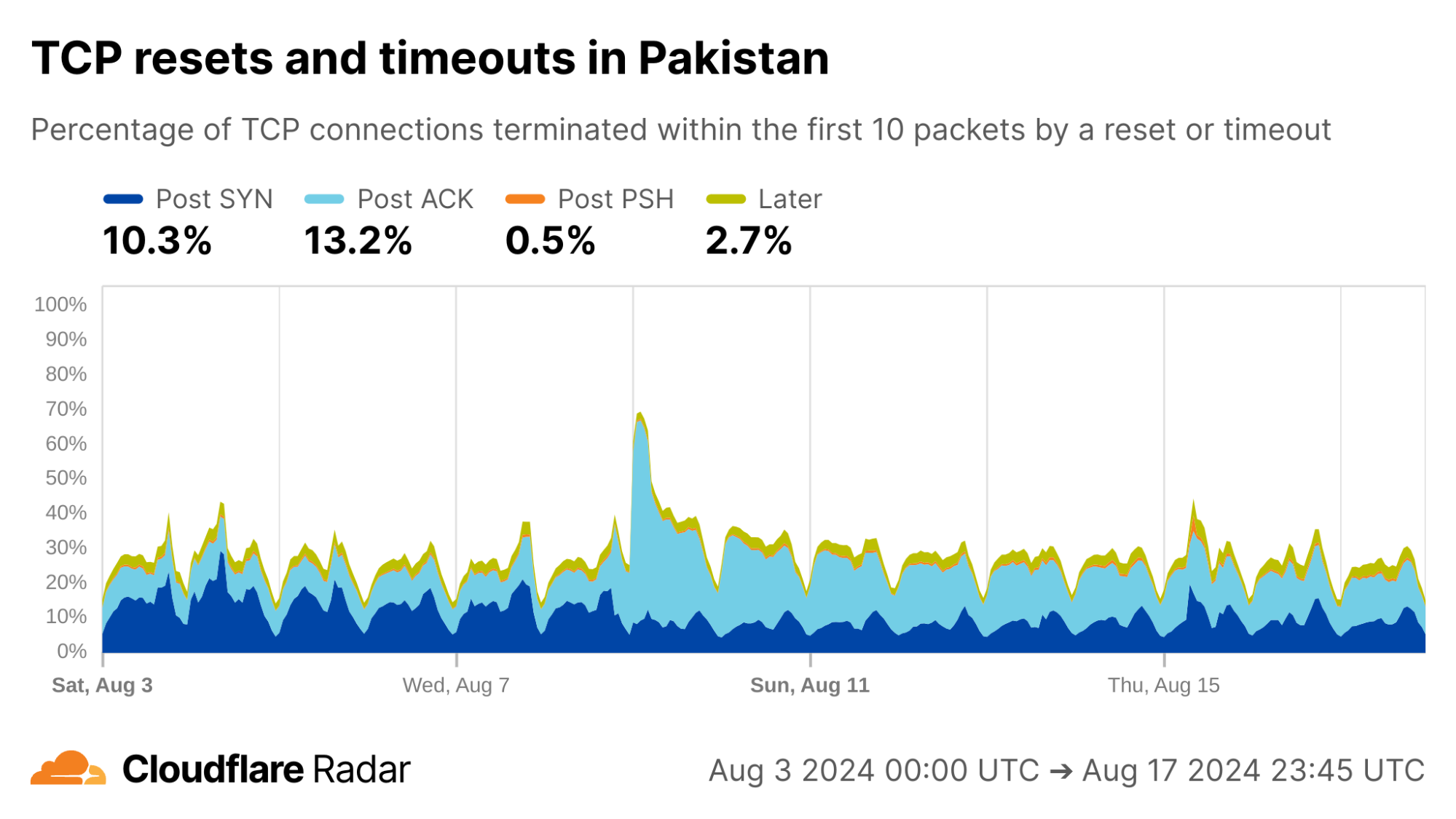

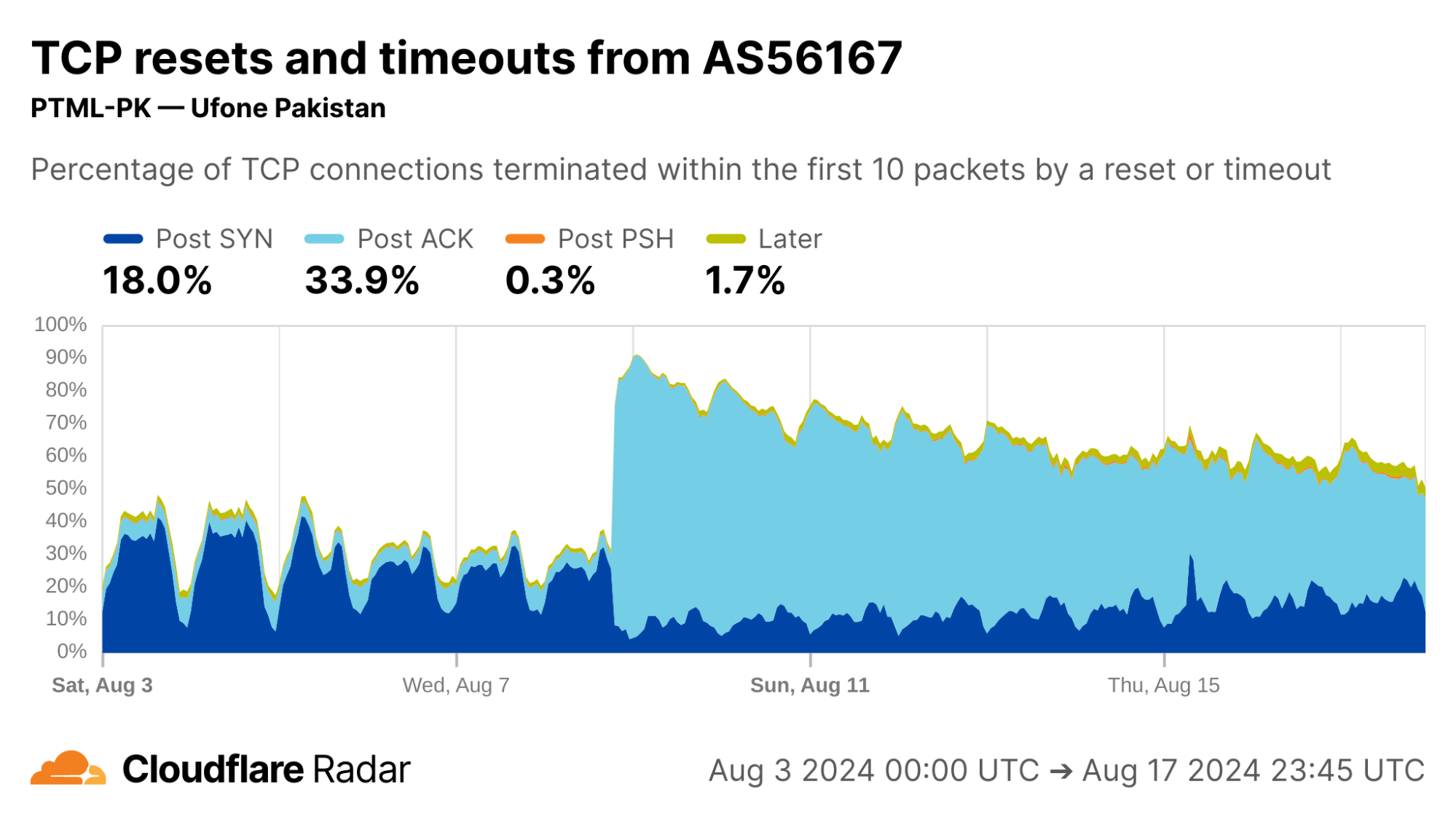

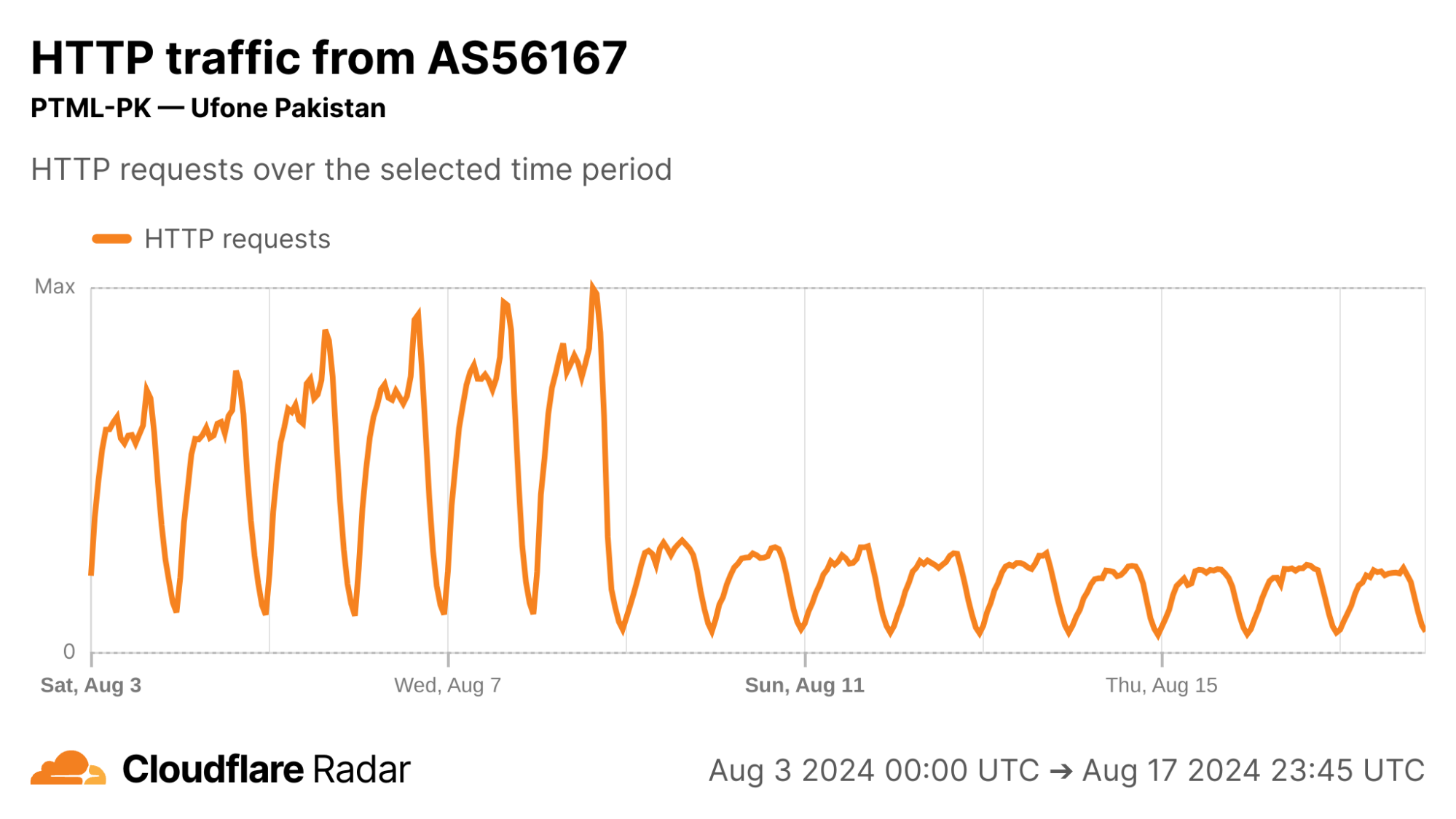

Reporting from inside Pakistan suggests changes in users’ Internet experience throughout August 2024. Taking a look at a two-week interval in early August, there is a significant shift in Post-ACK connection anomalies starting on August 9, 2024.

via Cloudflare Radar

The August 9 Post-ACK spike can be almost entirely attributed to AS56167 (Pak Telecom Mobile Limited), shown below in the first image, where Post-ACK anomalies jumped from under 5% to upwards of 70% of all connections, and have remained high since. Correspondingly, we see a significant reduction in the number of successful HTTP requests reaching Cloudflare’s network from clients in AS56167, below in the second image, which provides evidence that connections are being disrupted. This Pakistan example reinforces the importance of corroborating reports and observations, discussed in more detail in the Radar dataset release.

via Cloudflare Radar

via Cloudflare Radar

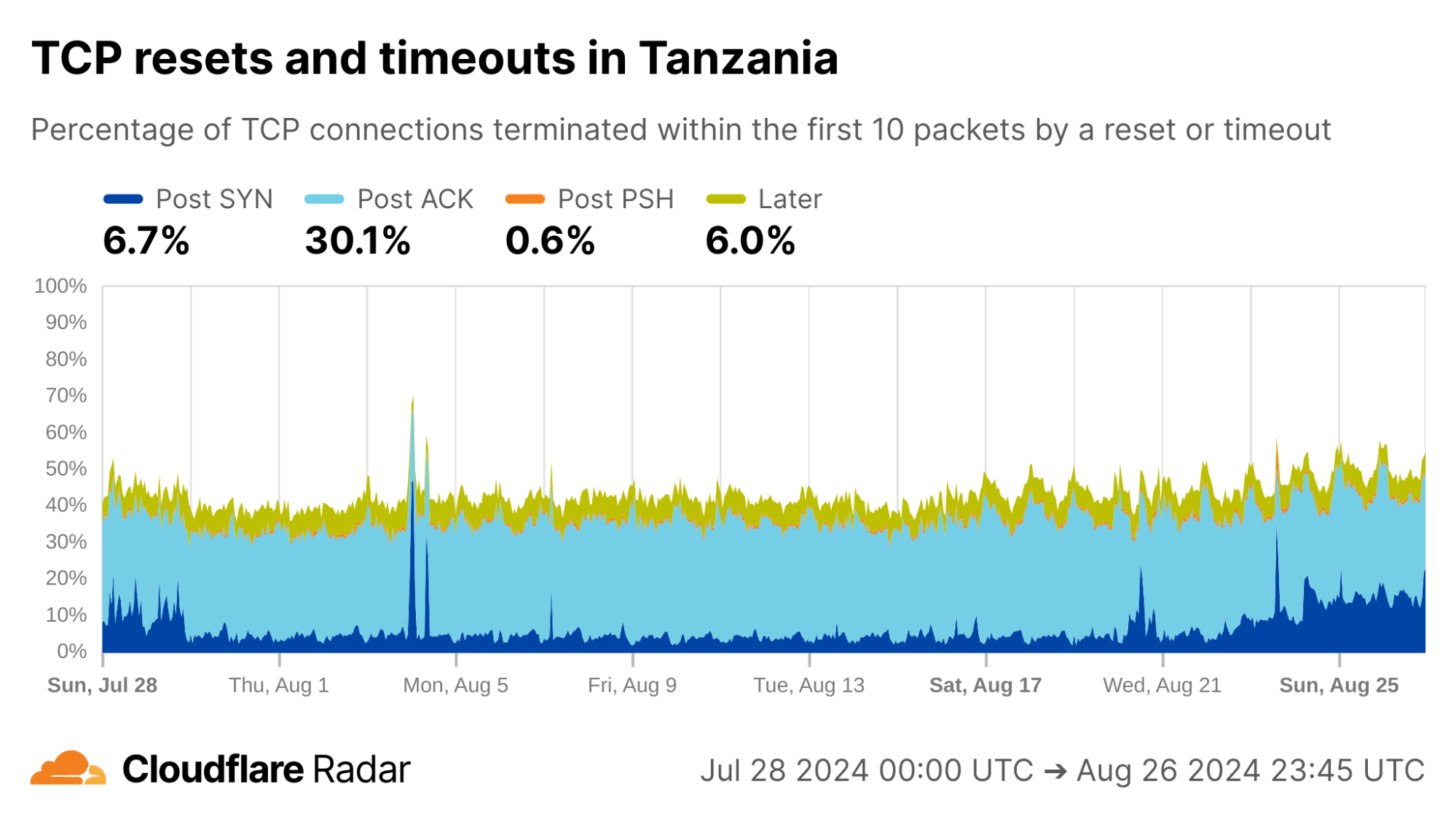

Tanzania

An OONI report from April 2024 discusses targeted connection tampering in Tanzania. The report states that this blocking is observed on the client side as connection timeouts after the Client Hello message during the TLS handshake, indicating that a middlebox is dropping the packet containing the Client Hello message. On the server side, connections tampered with in this way would appear as Post-ACK timeouts as the PSH packet containing the Client Hello message never reaches the server.

Looking at the Post-ACK data represented in the light-blue portion, below, we find matching evidence: close to 30% of all new TCP connections from Tanzania appear as Post-ACK anomalies. Breaking this down further (not shown in the plots below), approximately one third is due to timeouts, consistent with the OONI report above. The remainder is due to RSTs.

via Cloudflare Radar

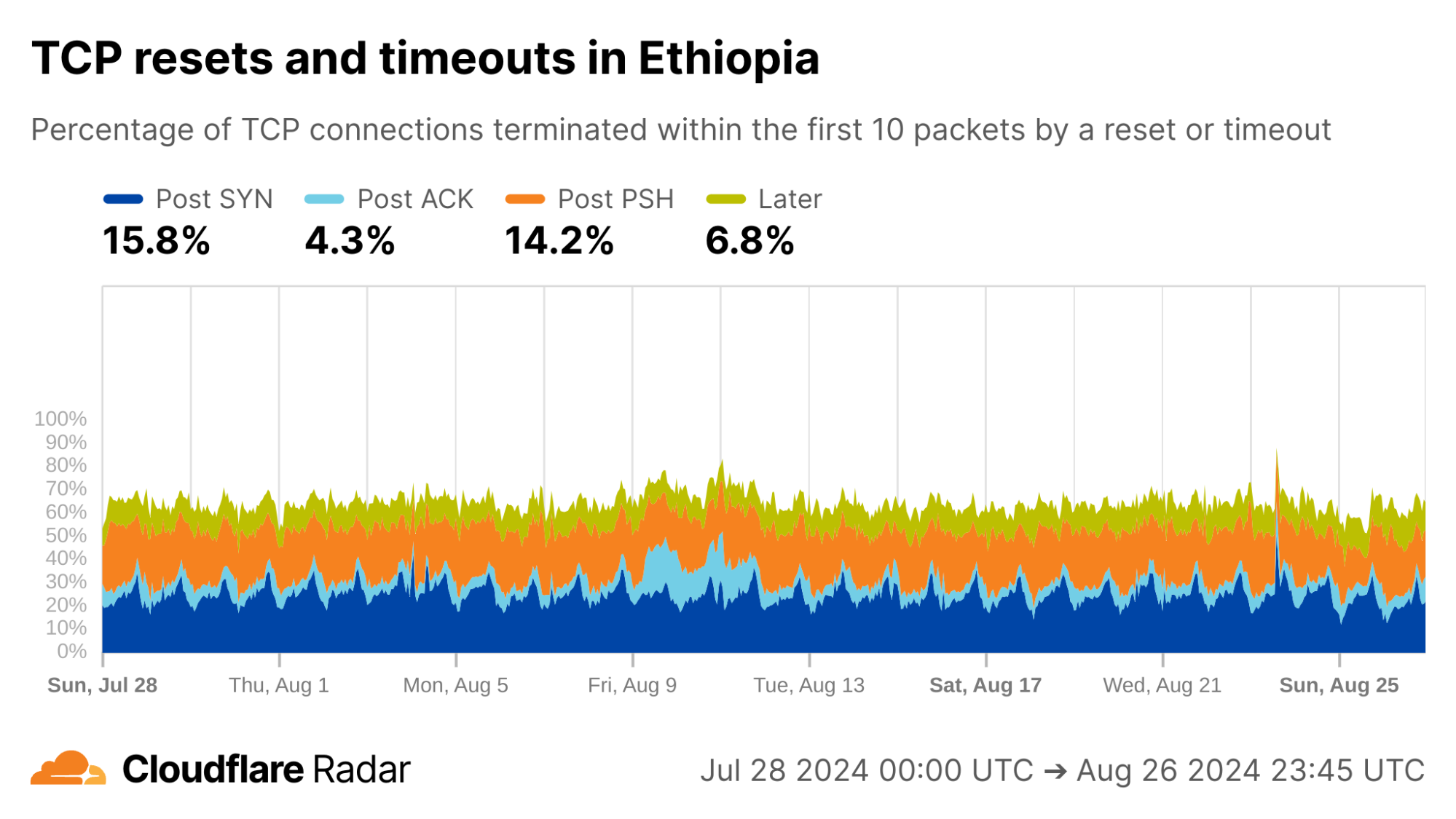

Ethiopia

Ethiopia is another location with previously-reported connection tampering. Consistent with this, we see elevated rates of Post-PSH TCP anomalies across networks in Ethiopia. Our internal data shows that the majority of Post-PSH anomalies in this case are due to RSTs, although timeouts are also prevalent.

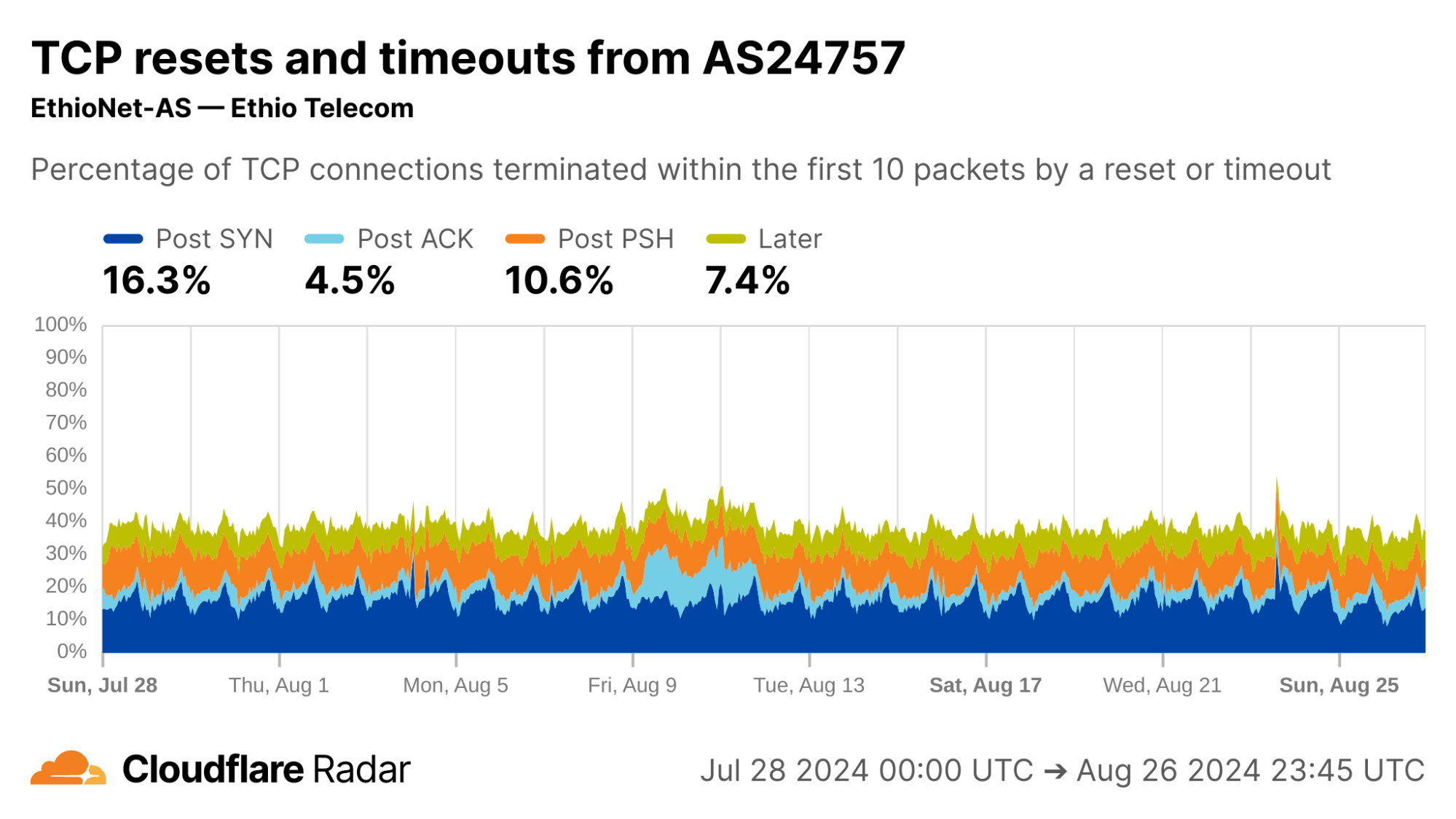

via Cloudflare Radar

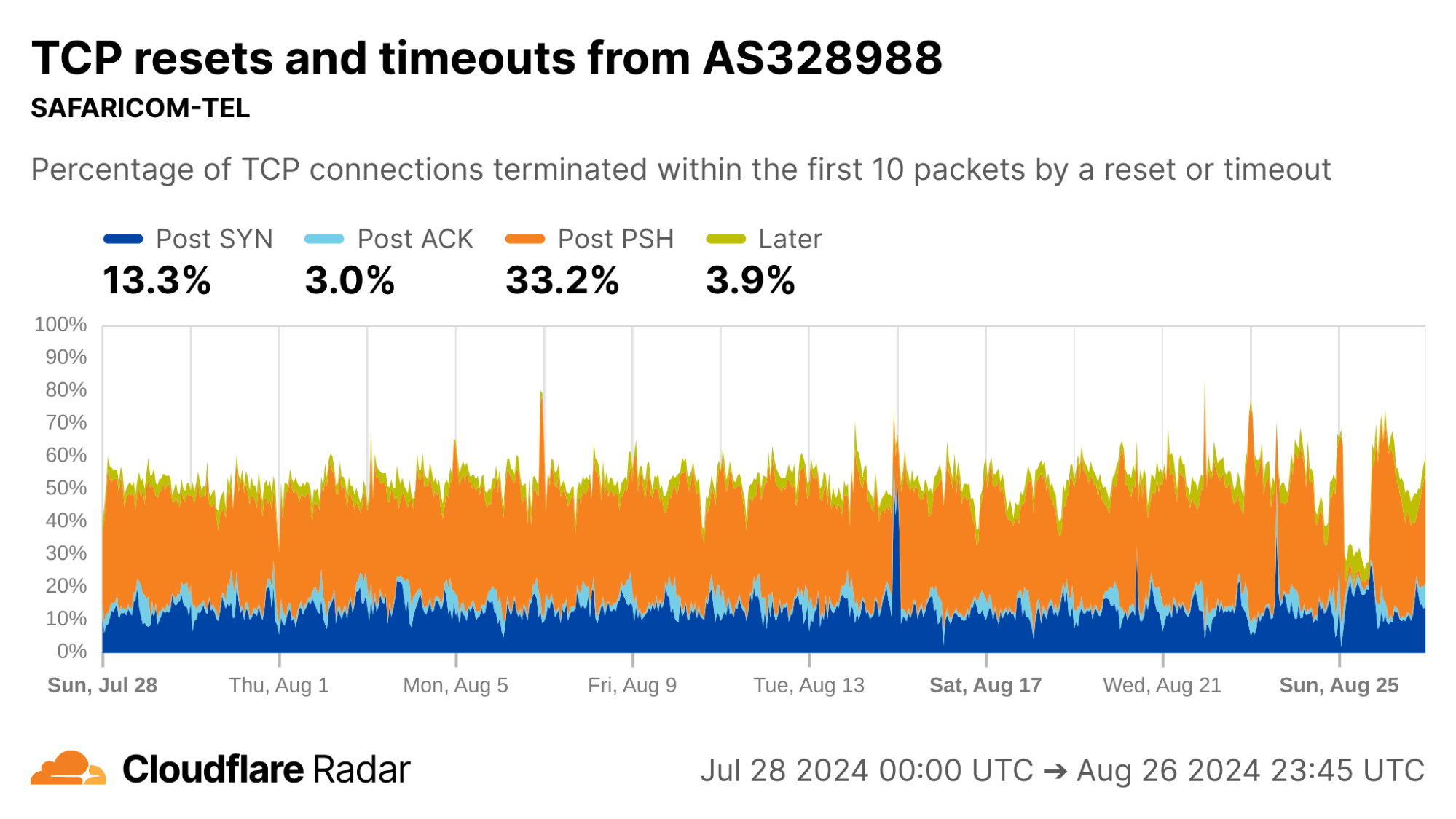

The majority of traffic arriving at Cloudflare’s servers from IP addresses geolocated in Ethiopia is from AS24757 (Ethio Telecom), shown below in the first image, so it is perhaps unsurprising that its data closely matches the country-wide distribution of connection anomalies. The proportion of Post-PSH anomalies on connections originating from AS328988 (SAFARICOM TELECOMMUNICATIONS ETHIOPIA PLC), shown below in the second image, is higher and accounts for over 33% of all connections from that network.

via Cloudflare Radar

via Cloudflare Radar

Reflecting on the present to promote a resilient future

Connection tampering is a blocking mechanism that is deployed in various forms throughout the Internet. Although we have developed ways to help detect and understand it globally, the experience is just as individual as an interrupted phone call.

Connection tampering is also made possible by accident. It works because domain names are visible in cleartext. But it may not always be this way. For example, Encrypted Client Hello (ECH) is an emerging building block that encrypts the SNI field.

We’ll continue to look for ways to talk about network activity and disruption, all to foster wider conversations. Check out the newest additions about connection anomalies on Cloudflare Radar and the corresponding blog post, as well as the peer-reviewed technical paper and its 15-minute summary talk.

Source: Cloudflare